Introducing: Our Brand New Dashboard

We’ve been working hard these past two months, ever since we got our previous release out the door. This time, we invested a lot of time and thought in the small items, and in one big item as well.

All over the service, you’ll notice some slight changes to the UI. This is an ongoing process to fine-tune the service and make it simpler to use for our customers.

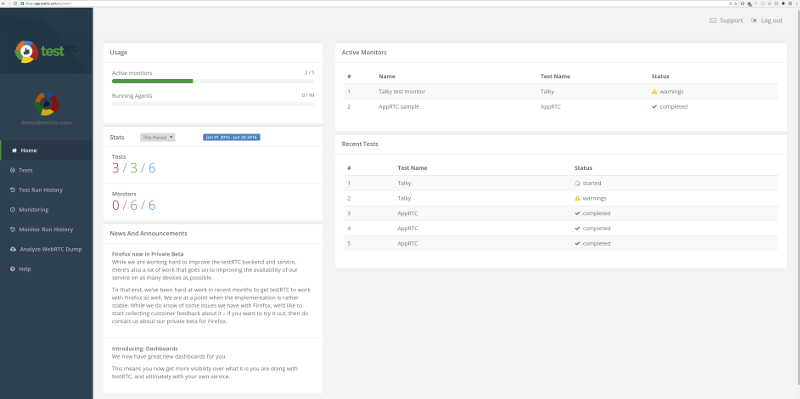

The biggest visible addition to our latest release is the introduction of a new user dashboard.

From now on, when users log in, they get a bird’s-eye view of their activities in testRTC.

What can you see on the dashboard?

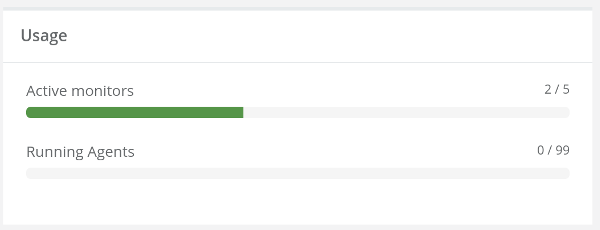

Usage

This area of the dashboard highlights the usage of your account.

It shows which resources are available to you, so when you plan a stress test, you know whether you have enough browsers for it.

If you want to do ad-hoc testing with more browsers than are available in your account, you’ll need to holler at us, and we’ll enable more browsers on your account for a period of time.

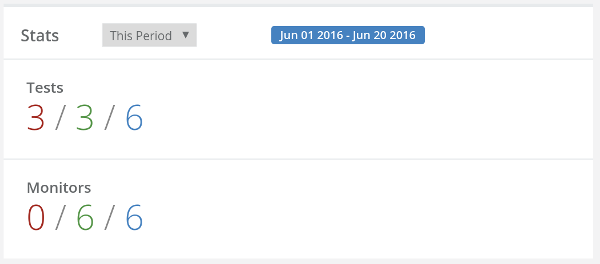

Stats

This area shows the statistics of your usage over a span of time. It is particularly useful for managers who want to know how many tests were conducted and how they fared.

- In red, we indicate tests and monitor executions that failed for the period selected

- In green, we indicate tests and monitor executions that succeeded for the period selected

- In blue, we indicate the total number of tests and monitor executions for the period selected

You can also select a different period to look at.

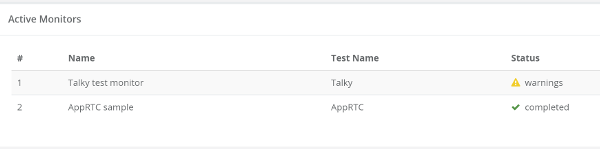

Active Monitors

This area indicates which monitors are up and running at the moment, along with the status of their most recent execution.

Clicking any of the rows takes you to the monitor run results, filtered for that specific monitor.

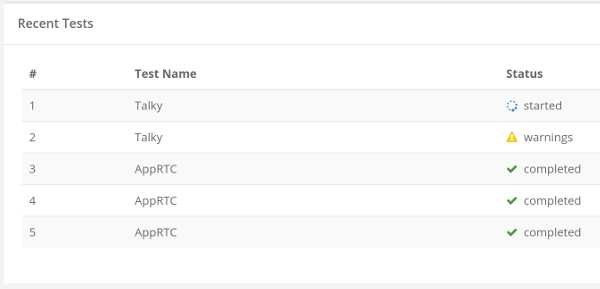

Recent Tests

This area shows the last 5 tests that were executed, along with their results.

As with the active monitors, clicking a test takes you to its results.

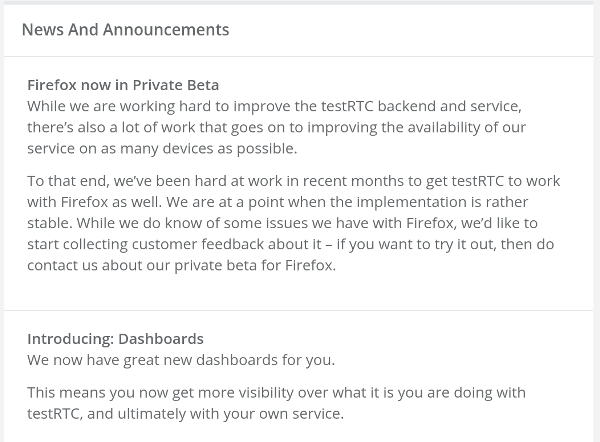

News and Announcements

This area shows the latest news and announcements for our users.

What’s Next?

Consider the dashboard a work in progress. We’re sure there is much to improve here, but we wanted to get it out the door and into the hands of our users. Ping us if you have any suggestions on how to improve it.

If you need to test or monitor a WebRTC product – don’t be shy – sign up for testRTC.