Indicates the expected outcome for a specific probe execution. The expectation is used to decide whether a test passed or failed.

The condition is evaluated at the end of the test, based on the collected information and metrics. The command can be placed anywhere in the script and can appear multiple times with different criteria.

testRTC also offers additional assertion and expectation commands.

Arguments

| Name | Type | Description |
|---|---|---|
| criteria | string | The criteria to test. See below for the available options. |
| message | string | Message to report if the criteria isn’t met. |
| level | string | Level of expectation, for example “warn” (as used in the examples below). |

Criteria

Each criteria string is made up of the metric to test, an operator and a value.

For example, “video.in > 0” checks that the number of incoming video channels is greater than 0.
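To illustrate how a criteria string breaks down into those three parts, here is a minimal JavaScript sketch. The parseCriteria helper is hypothetical and is not part of the testRTC API; it assumes the metric is a dot-separated name and that non-numeric values (such as codec names) are wrapped in single quotes.

```javascript
// Hypothetical helper (not part of testRTC): splits a criteria string such as
// "video.in > 0" into its metric, operator and value parts.
function parseCriteria(criteria) {
  // Two-character operators are listed first so ">=" is not matched as ">".
  const match = criteria.trim().match(/^([\w.]+)\s*(==|!=|>=|<=|>|<)\s*(.+)$/);
  if (!match) {
    throw new Error(`Unrecognized criteria: "${criteria}"`);
  }
  const [, metric, operator, rawValue] = match;
  const value = rawValue.trim();
  // Quoted values (e.g. codec names) stay strings; anything else is a number.
  const unquoted = value.replace(/^'(.*)'$/, "$1");
  return {
    metric,
    operator,
    value: unquoted !== value ? unquoted : Number(value),
  };
}

console.log(parseCriteria("video.in > 0"));
// → { metric: 'video.in', operator: '>', value: 0 }
```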

Operators

The available operators for the criteria are:

- ==

- >

- <

- >=

- <=

- !=
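Each operator is an ordinary comparison between the measured value and the threshold in the criteria. The sketch below maps them to JavaScript comparisons; OPERATORS and compare are hypothetical names used only for illustration, and the sketch assumes both sides are already numbers (or, for codec names, strings).

```javascript
// Hypothetical mapping from each criteria operator to a comparison function.
const OPERATORS = {
  "==": (a, b) => a === b,
  "!=": (a, b) => a !== b,
  ">": (a, b) => a > b,
  "<": (a, b) => a < b,
  ">=": (a, b) => a >= b,
  "<=": (a, b) => a <= b,
};

// Returns true when the measured value satisfies the operator and threshold.
function compare(measured, operator, threshold) {
  const fn = OPERATORS[operator];
  if (!fn) throw new Error(`Unsupported operator: ${operator}`);
  return fn(measured, threshold);
}

console.log(compare(2, ">", 0));    // true
console.log(compare(1.5, "<=", 2)); // true
console.log(compare(0, "!=", 0));   // false
```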

Criteria metrics

The metric in a criteria is a dot-separated chain that identifies the value to evaluate. Its exact form depends on the metric type.

Probe metrics

Probe metrics are general metrics related to the test results.

| Evaluated criteria | Description and example |
|---|---|
| connectionDuration | Evaluate the duration of the whole peer connection session, in seconds: “connectionDuration > 60” |
| score | Evaluate the score value of the whole test: “score > 5” |
| mos | Evaluate the MOS audio score of the whole test: “mos > 3” |
| Time | Evaluate the value of a timer, managed using the function .rtcGetTimer() |
| Metric | Evaluate the value of a metric, managed using the function .rtcSetMetric() |

Performance metrics

Performance metrics are those collected from the machine level: CPU, memory and network.

All metrics here are calculated as averages over the duration of the test. Add .min or .max as a suffix to check against the minimum or maximum value instead. For example, performance.browser.cpu.max is the maximum percentage of CPU used during the test.

| Evaluated criteria | Description and example |
|---|---|
| performance.browser.cpu | Percentage of CPU cores used by the browser during the test: “performance.browser.cpu < 0.85” |
| performance.browser.memory | Amount of memory used by the browser during the test, in megabytes (MB): “performance.browser.memory < 800” |
| performance.probe.cpu | Percentage of CPU cores used by the probe during the test. |
| performance.probe.memory | Amount of memory used by the probe during the test, in megabytes (MB). |
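Conceptually, the default metric and its .min and .max variants summarize the same series of samples. The sketch below assumes the samples are available as a plain array of numbers; the values are made up and the summarize helper is hypothetical, not part of testRTC.

```javascript
// Summarizes a series of samples as the default (average), .min and .max
// variants of a performance metric are described: average, minimum, maximum.
function summarize(samples) {
  if (samples.length === 0) throw new Error("No samples collected");
  const sum = samples.reduce((acc, s) => acc + s, 0);
  return {
    avg: sum / samples.length,
    min: Math.min(...samples),
    max: Math.max(...samples),
  };
}

// Hypothetical browser memory samples (MB) taken during a test run.
const memory = summarize([620, 700, 780, 660]);
console.log(memory);           // { avg: 690, min: 620, max: 780 }
console.log(memory.avg < 800); // true:  "performance.browser.memory < 800"
console.log(memory.max < 750); // false: "performance.browser.memory.max < 750"
```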

Media metrics

Media metrics relate to audio and video channels. They follow this hierarchy chain for the metric name to use:

Media type (audio / video) . Direction (in / out) . channel . Evaluated criteria

For example: audio.in.channel.bitrate

The channel level is optional, i.e. both formats will work:

- video.in.bitrate – Evaluates the total bitrate of all incoming video channels

- audio.out.channel.bitrate – Evaluates the bitrate of each outgoing audio channel separately
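The difference between the aggregated and per-channel forms can be sketched as follows. The per-channel bitrates are hypothetical, and the sketch assumes that a per-channel criteria must hold on every channel, as the Bitrate row below describes.

```javascript
// Hypothetical average bitrates of two incoming audio channels.
const channels = [{ bitrate: 32 }, { bitrate: 28 }];

// "audio.in.bitrate > 50": a single check against the total of all channels.
const total = channels.reduce((sum, ch) => sum + ch.bitrate, 0);
const aggregatePasses = total > 50;

// "audio.in.channel.bitrate > 30": the check must hold on every channel.
const perChannelPasses = channels.every((ch) => ch.bitrate > 30);

console.log(total, aggregatePasses, perChannelPasses); // 60 true false
```

Here the aggregated check passes while the per-channel check fails, because the second channel alone stays below the threshold.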

| Evaluated criteria | Description and example |
|---|---|
| Number of channels | Evaluate the number of channels: “video.out >= 1” |
| Channel is empty | Evaluate whether channels are empty, i.e. the total number of sent/received packets is zero. “video.in.empty == 0” checks that there are no empty incoming video channels. |
| Bitrate | “audio.out.bitrate >= 30” evaluates the TOTAL aggregated bitrate of all outgoing audio channels. “audio.out.channel.bitrate > 30” evaluates the bitrate of EACH outgoing audio channel (all channels are checked, one by one). Also supports the .min/.max suffix: “video.out.bitrate.max < 500” or “audio.in.bitrate.min > 30” |
| Packet loss | Evaluate the channels’ packet loss (in %): “audio.in.packetloss < 2” |
| Packets lost | Evaluate the number of packets lost on the channel: “audio.in.packetlost < 20” |
| Data | Evaluate the total amount of sent/received bytes in the whole session: “audio.in.channel.data >= 6000” |
| Packets | Evaluate the total number of packets sent/received: “audio.in.channel.packets >= 600” |
| Roundtrip | Evaluate the average round-trip time: “audio.out.channel.roundtrip < 100”. Also supports the .min/.max suffix: “video.out.roundtrip.max < 200”. Available only for outgoing channels. |
| Jitter | Evaluate the average jitter on the network (based on the jitterReceived getStats metric): “audio.in.jitter < 200”. Also supports the .min/.max suffix: “audio.in.jitter.max < 500” or “audio.in.jitter.min > 10”. Available only for incoming channels. For video, this checks jitterBufferMs instead, since Chrome doesn’t provide incoming video jitter values. |
| Codec | Evaluate the codec name: “audio.out.codec.channel == 'OPUS'” or “audio.out.codec == 'opus,vp8'” |
| FPS | Evaluate frames per second: “video.in.fps >= 25” |
| Width | Evaluate the video resolution width: “video.out.channel.resolution.width > 320”. This criteria is valid only for video channels. |
| Height | Evaluate the video resolution height: “video.out.channel.resolution.height > 240”. This criteria is valid only for video channels. |
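The Packet loss and Packets lost criteria measure different things: one is a percentage, the other a count. A common way to derive the percentage, assumed here rather than taken from testRTC, is lost packets divided by all expected packets (received plus lost). The packetLossPercent helper and the counters are hypothetical.

```javascript
// Assumed definition: lost packets as a percentage of all expected packets
// (received + lost). testRTC's exact formula may differ.
function packetLossPercent(packetsReceived, packetsLost) {
  const expected = packetsReceived + packetsLost;
  // Avoid dividing by zero on a channel that saw no packets at all.
  return expected === 0 ? 0 : (packetsLost * 100) / expected;
}

// Hypothetical counters for one incoming audio channel.
const received = 980;
const lost = 20;
const lossPercent = packetLossPercent(received, lost);

console.log(lossPercent);     // 2
console.log(lossPercent < 2); // false: "audio.in.packetloss < 2" is not met
console.log(lost < 25);       // true:  "audio.in.packetlost < 25" is met
```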

Complex expectations

You can also combine criteria with the boolean operators “and” and “or” to build more complex expectations.
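When a combined expression uses channel-level metrics, it helps to think of it as being checked on each channel in turn. The sketch below assumes that semantics, and also assumes the expectation passes only if every channel passes; the channel data is hypothetical, and "and" and "or" are not mixed in one expression because their relative precedence is not specified here.

```javascript
// Hypothetical incoming audio channels (values are made up).
const audioIn = [
  { bitrate: 40, packetloss: 0.5 },
  { bitrate: 38, packetloss: 3.1 },
];

// "audio.in.channel.bitrate > 30 and audio.in.channel.packetloss < 2"
const andResults = audioIn.map((ch) => ch.bitrate > 30 && ch.packetloss < 2);

// "audio.in.channel.bitrate > 39 or audio.in.channel.packetloss < 5"
const orResults = audioIn.map((ch) => ch.bitrate > 39 || ch.packetloss < 5);

console.log(andResults); // [ true, false ]
console.log(orResults);  // [ true, true ]
// Overall result, assuming every channel has to pass:
console.log(andResults.every(Boolean), orResults.every(Boolean)); // false true
```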

This is useful, for example, when you want to apply a threshold to only some of the channels. Assume you have several incoming video channels, some of which are muted so no data flows on them, but you still want to test the frame rate of the others. Here’s how you can do that:

```javascript
client.rtcSetTestExpectation("video.in.channel.bitrate == 0 or video.in.channel.fps > 0");
```

Code examples

General examples

Listed below are general examples of how you can use test expectations in your code:

```javascript
client
  // The session was longer than 60 seconds
  .rtcSetTestExpectation("connectionDuration > 60")
  // We have at least one incoming and one outgoing channel, for both audio and video
  .rtcSetTestExpectation("audio.in >= 1")
  .rtcSetTestExpectation("audio.out >= 1")
  .rtcSetTestExpectation("video.in >= 1")
  .rtcSetTestExpectation("video.out >= 1")
  // We have no empty channels
  .rtcSetTestExpectation("audio.in.empty == 0")
  .rtcSetTestExpectation("audio.out.empty == 0")
  .rtcSetTestExpectation("video.in.empty == 0")
  .rtcSetTestExpectation("video.out.empty == 0")
  // Audio bitrate: total, peak (warning only) and per channel
  .rtcSetTestExpectation("audio.in.bitrate >= 35")
  .rtcSetTestExpectation("audio.in.bitrate <= 45")
  .rtcSetTestExpectation("audio.in.bitrate.max <= 50", "warn")
  .rtcSetTestExpectation("audio.out.bitrate >= 35")
  .rtcSetTestExpectation("audio.out.bitrate <= 45")
  .rtcSetTestExpectation("audio.out.bitrate.max <= 50", "warn")
  .rtcSetTestExpectation("audio.in.channel.bitrate > 35")
  .rtcSetTestExpectation("audio.out.channel.bitrate > 35")
  .rtcSetTestExpectation("audio.in.channel.bitrate < 45")
  .rtcSetTestExpectation("audio.out.channel.bitrate < 45")
  // Audio packet loss is at most 2%
  .rtcSetTestExpectation("audio.in.packetloss <= 2")
  .rtcSetTestExpectation("audio.out.packetloss <= 2")
  .rtcSetTestExpectation("audio.in.channel.packetloss <= 2")
  .rtcSetTestExpectation("audio.out.channel.packetloss <= 2")
  // Minimum bytes and packets transferred on each audio channel
  .rtcSetTestExpectation("audio.in.channel.data >= 6000")
  .rtcSetTestExpectation("audio.out.channel.data >= 6000")
  .rtcSetTestExpectation("audio.in.channel.packets >= 600")
  .rtcSetTestExpectation("audio.out.channel.packets >= 600")
  // Audio round-trip time (outgoing channels only)
  .rtcSetTestExpectation("audio.out.roundtrip <= 100")
  .rtcSetTestExpectation("audio.out.channel.roundtrip <= 100")
  .rtcSetTestExpectation("audio.out.roundtrip.max < 150", "warn")
  // Audio jitter (incoming channels only)
  .rtcSetTestExpectation("audio.in.jitter < 200")
  .rtcSetTestExpectation("audio.in.channel.jitter < 90")
  .rtcSetTestExpectation("audio.in.jitter.max < 120", "warn")
  // Audio codec
  .rtcSetTestExpectation("audio.in.codec == 'OPUS'")
  .rtcSetTestExpectation("audio.out.codec == 'OPUS'")
  // Adding video channel checks: bitrate
  .rtcSetTestExpectation("video.in.bitrate >= 200")
  .rtcSetTestExpectation("video.in.bitrate < 500")
  .rtcSetTestExpectation("video.in.bitrate.max <= 650", "warn")
  .rtcSetTestExpectation("video.in.bitrate.min >= 150", "warn")
  .rtcSetTestExpectation("video.out.bitrate >= 200")
  .rtcSetTestExpectation("video.out.bitrate < 500")
  .rtcSetTestExpectation("video.out.bitrate.max < 650", "warn")
  .rtcSetTestExpectation("video.out.bitrate.min >= 100", "warn")
  .rtcSetTestExpectation("video.in.channel.bitrate >= 200")
  .rtcSetTestExpectation("video.out.channel.bitrate >= 200")
  .rtcSetTestExpectation("video.in.channel.bitrate < 500")
  .rtcSetTestExpectation("video.out.channel.bitrate < 500")
  // Video packet loss is at most 2%
  .rtcSetTestExpectation("video.in.packetloss <= 2")
  .rtcSetTestExpectation("video.out.packetloss <= 2")
  .rtcSetTestExpectation("video.in.channel.packetloss <= 2")
  .rtcSetTestExpectation("video.out.channel.packetloss <= 2")
  // Minimum bytes and packets transferred on each video channel
  .rtcSetTestExpectation("video.in.channel.data >= 7000")
  .rtcSetTestExpectation("video.out.channel.data >= 7000")
  .rtcSetTestExpectation("video.in.channel.packets >= 700")
  .rtcSetTestExpectation("video.out.channel.packets >= 700")
  // Video round-trip time (outgoing channels only)
  .rtcSetTestExpectation("video.out.roundtrip <= 100")
  .rtcSetTestExpectation("video.out.channel.roundtrip < 100")
  .rtcSetTestExpectation("video.out.roundtrip.max < 120", "warn")
  // Video jitter (incoming channels only)
  .rtcSetTestExpectation("video.in.jitter < 100")
  .rtcSetTestExpectation("video.in.channel.jitter < 100")
  .rtcSetTestExpectation("video.in.jitter.max < 120", "warn")
  // Video codec
  .rtcSetTestExpectation("video.in.codec == 'vp8'")
  .rtcSetTestExpectation("video.out.codec == 'vp8'")
  // Frame rate
  .rtcSetTestExpectation("video.in.fps <= 35")
  .rtcSetTestExpectation("video.out.fps <= 35")
  .rtcSetTestExpectation("video.in.fps >= 25")
  .rtcSetTestExpectation("video.out.fps >= 25")
  .rtcSetTestExpectation("video.in.channel.fps >= 10")
  .rtcSetTestExpectation("video.out.channel.fps >= 10")
  // Call setup time
  .rtcSetTestExpectation("callSetupTime < 50")
  // Per-channel bitrate drops (warnings only)
  .rtcSetTestExpectation("audio.in.channel.bitrate.drop < 50", "warn")
  .rtcSetTestExpectation("audio.out.channel.bitrate.drop < 50", "warn")
  .rtcSetTestExpectation("video.in.channel.bitrate.drop < 50", "warn")
  .rtcSetTestExpectation("video.out.channel.bitrate.drop < 50", "warn");
```

Error example

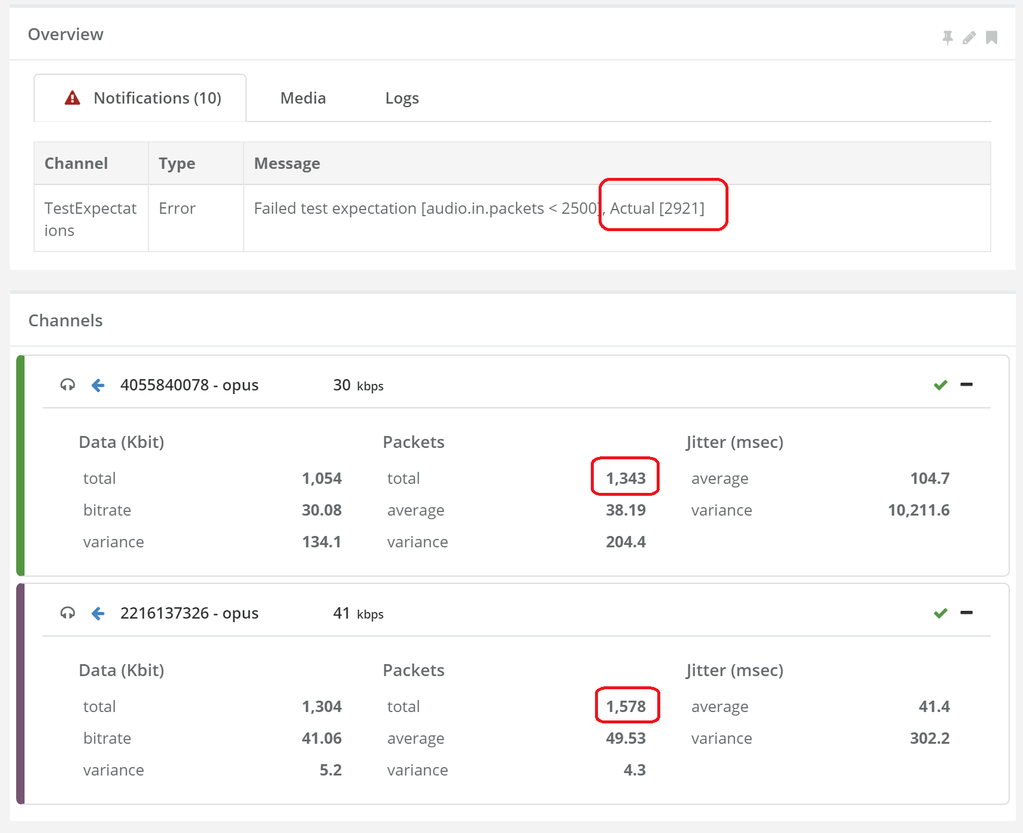

Below is an example of how an error will show in the report:

client.rtcSetTestExpectation("audio.in.packets < 2500");Code language: CSS (css)

Different expectations for different probes

Expectations are scoped to the code path that sets them. The following example uses this to set different expectations for different probes in the same test run:

```javascript
// agentType is assumed to be defined earlier in the script and to
// identify which probe is running this code.
if (agentType === 1) {
  // Set (and evaluated) only on probes where agentType is 1:
  // every outgoing video channel has a non-zero bitrate
  client.rtcSetTestExpectation("video.out.channel.bitrate > 0");
} else {
  // Set (and evaluated) only on all other probes:
  // every incoming video channel has a non-zero bitrate
  client.rtcSetTestExpectation("video.in.channel.bitrate > 0");
}
```