The summer is now behind us and it is time to introduce our latest release of testRTC 🥳

Besides the usual slew of features and improvements, we focused on two big areas:

- More powerful performance testing and monitoring – there is now a new performance dashboard. More on that below

- Simplified UI with more information – our endless pursuit of the best visualization in the industry. This time with traffic lights, tooltips, maps, shortcuts and more data

Read on below for the full list of changes – there is definitely a thing or two (at the very least) that you will find useful.

SSO

We introduced SSO via Okta in the last release.

In this one we’re also adding support for Azure Active Directory.

Unrelated, or semi-related – from this release on, API keys can only be regenerated by Account Admin users.

Chrome 107

Google decided with no real warning to change the way getStats() returns its objects, changing all identifiers. This breaks most of the analysis tools we’ve seen (and has broken our analysis as well).

In this release we’ve invested heavily (and urgently) in making sure our getStats() code logic can work with the “old” stats, the “new” stats, and other browsers’ stats.

Not to be left behind, Chrome 108 and 109 are bringing with them more getStats() changes and we’ve been updating our analysis to take them into account as well.
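
One way to stay resilient to this kind of change is to treat stat identifiers as opaque strings: select entries by their `type` and follow reference fields such as `codecId`, rather than parsing the identifier itself. The snippet below is a minimal sketch of that idea and not our actual analysis code. A plain `Map` stands in for the report returned by getStats(), the helper name `inboundCodecs` is hypothetical, and the identifiers in both sample reports are made up for illustration.

```typescript
// A stats entry as found in a getStats() report; only the fields used here are typed.
type StatsEntry = { id: string; type: string; [key: string]: unknown };

// Hypothetical helper: collect the codec mimeType of every inbound RTP stream
// by following the codecId reference instead of parsing the id string.
function inboundCodecs(report: Map<string, StatsEntry>): string[] {
  const codecs: string[] = [];
  report.forEach((entry) => {
    if (entry.type !== "inbound-rtp") return;
    const codecId = entry["codecId"];
    if (typeof codecId !== "string") return;
    const codec = report.get(codecId);
    const mime = codec ? codec["mimeType"] : undefined;
    if (typeof mime === "string") codecs.push(mime);
  });
  return codecs;
}

// The same logical report with two different identifier styles (both made up).
const longIds = new Map<string, StatsEntry>([
  ["InboundAudioStream_123", { id: "InboundAudioStream_123", type: "inbound-rtp", codecId: "Codec_111" }],
  ["Codec_111", { id: "Codec_111", type: "codec", mimeType: "audio/opus" }],
]);
const shortIds = new Map<string, StatsEntry>([
  ["IA123", { id: "IA123", type: "inbound-rtp", codecId: "C111" }],
  ["C111", { id: "C111", type: "codec", mimeType: "audio/opus" }],
]);

console.log(inboundCodecs(longIds), inboundCodecs(shortIds));
```

Because nothing in the helper depends on what an identifier looks like, both reports yield the same codec list.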

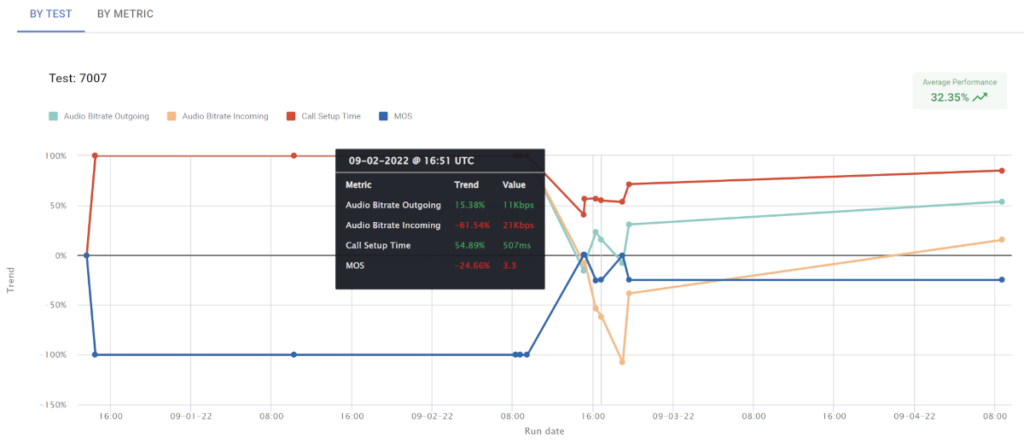

Performance Dashboard

Have you worked on optimizing the media quality of your service? Or wanted to know over time if things are improving or degrading? Now you can easily do this using testingRTC and upRTC.

How does this work? You select the tests (or monitor), pick the metrics you are interested in, and testRTC will do the rest.

The Performance Dashboard takes the oldest test result as the baseline and, from there, calculates the trend for each of the metrics you’ve picked.
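
To make the baseline idea concrete, here is a small sketch. It assumes the trend is expressed as a percent change relative to the oldest run; the exact formula the dashboard uses is not spelled out here, and the `RunResult` shape and `trendFromBaseline` name are hypothetical.

```typescript
// One test run: when it ran and the value of a single metric (for example, a bitrate).
interface RunResult {
  timestamp: number;
  value: number;
}

// Percent change of each run relative to the oldest run (the baseline).
// Assumption: a baseline of 0 yields a trend of 0 to avoid dividing by zero.
function trendFromBaseline(runs: RunResult[]): number[] {
  if (runs.length === 0) return [];
  const sorted = runs.slice().sort((a, b) => a.timestamp - b.timestamp);
  const baseline = sorted[0].value;
  return sorted.map((r) =>
    baseline === 0 ? 0 : ((r.value - baseline) * 100) / baseline
  );
}

// Runs given out of order on purpose; the oldest one (timestamp 1) is the baseline.
const trend = trendFromBaseline([
  { timestamp: 2, value: 1100 },
  { timestamp: 1, value: 1000 },
  { timestamp: 3, value: 900 },
]);
console.log(trend);
```

Here the baseline run sits at 0%, the second run is 10% above it and the third is 10% below it.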

You can view up to 5 different test scripts and 5 different metrics at the same time.

Check it out and let us know if you found it useful.

Analysis

A lot of work has gone lately into improving the analysis we do. Here’s what we’ve introduced in this round:

Weighted analysis based on timing

We’ve spent a ton of time in this release working on how we calculate each and every individual value we show the user on practically every level of our test and monitoring results.

The main change we’ve made is to make sure each metric on a channel or probe/peer gets its fair share when averaged with other channels or probes/rooms. This means, for example, that the shorter a channel is relative to the other channels in a probe, the less weight it carries in the probe’s calculated metrics.

This gives more accurate results across the board and makes it easier for you to analyze edge case results.
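
As a rough illustration of what weighting by timing means, the sketch below averages a metric across channels using each channel’s duration as its weight. It is a simplified model under that single assumption, not our production calculation; the `ChannelMetric` shape and `weightedByDuration` name are hypothetical.

```typescript
// A metric value measured on one channel, plus how long that channel was active.
interface ChannelMetric {
  durationSec: number;
  value: number;
}

// Duration-weighted average: longer channels contribute proportionally more.
// Returns null when there is no active time to average over.
function weightedByDuration(channels: ChannelMetric[]): number | null {
  let totalDuration = 0;
  let weightedSum = 0;
  for (const ch of channels) {
    totalDuration += ch.durationSec;
    weightedSum += ch.durationSec * ch.value;
  }
  return totalDuration > 0 ? weightedSum / totalDuration : null;
}

// A 60-second channel with 1% packet loss and a 10-second channel with 8%.
const probeLoss = weightedByDuration([
  { durationSec: 60, value: 1 },
  { durationSec: 10, value: 8 },
]);
console.log(probeLoss); // (60*1 + 10*8) / 70 = 2
```

A plain average of the two channels would report 4.5% packet loss, while the weighted result of 2% reflects that the short channel covered only a small part of the session.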

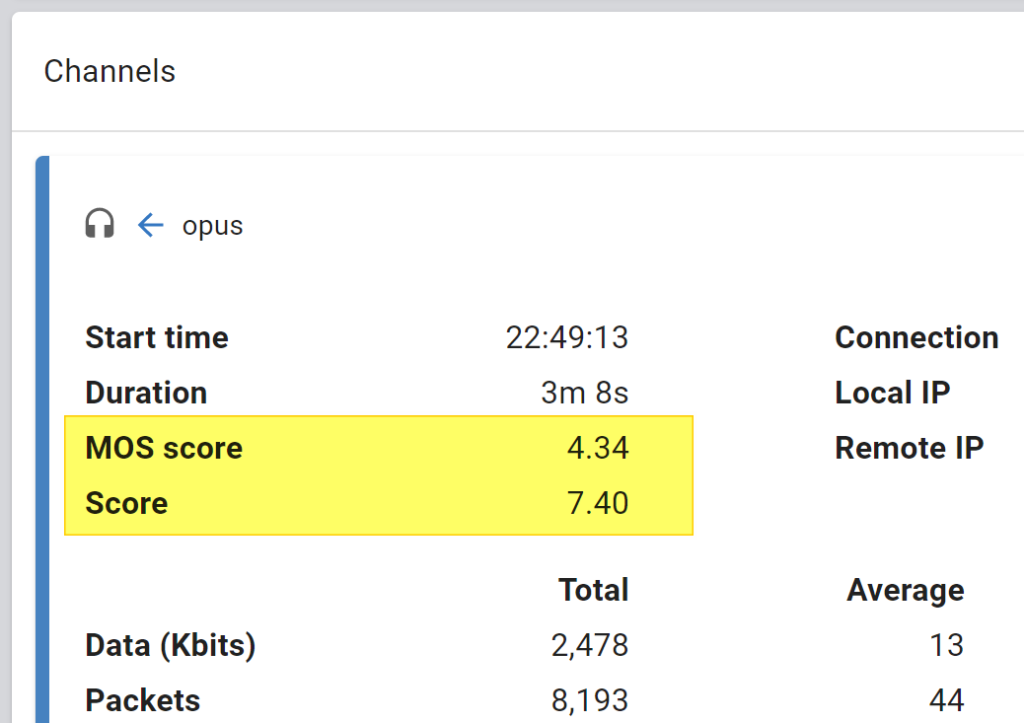

Score calculation

testRTC has two separate quality scores for results: Score and MOS.

MOS is for audio and Score takes into account video as well.

Since different applications handle video differently, the weight they give to different media aspects tends to differ from one application to another as well.

In this round of changes, we polished our score calculation:

- Score will be 0 if there are no media streams at all for the given probe/peer

- We calculate and show MOS and Score per individual channel

- When calculating the total score of a probe/peer, we can now configure the weight per channel type (incoming/outgoing, audio/video) individually to fit the use case

- And yes, Score now also adheres to the weighted average calculations we are doing everywhere
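
The points above can be sketched as a single function. This is an assumption-heavy illustration: the weights, the type names, and the way channel-type weights are combined with duration are all hypothetical and chosen only to show the idea.

```typescript
type ChannelKind = "audio-in" | "audio-out" | "video-in" | "video-out";

// Per-channel score plus the time the channel was active.
interface ChannelScore {
  kind: ChannelKind;
  score: number;
  durationSec: number;
}

// Total score for a probe/peer: each channel is weighted by its configured
// channel-type weight multiplied by its duration (assumed combination).
// With no media streams at all (or zero total weight), the score is 0.
function probeScore(
  channels: ChannelScore[],
  kindWeights: Record<ChannelKind, number>
): number {
  let totalWeight = 0;
  let weightedSum = 0;
  for (const ch of channels) {
    const w = kindWeights[ch.kind] * ch.durationSec;
    totalWeight += w;
    weightedSum += w * ch.score;
  }
  return totalWeight > 0 ? weightedSum / totalWeight : 0;
}

// Hypothetical configuration where video counts twice as much as audio.
const weights: Record<ChannelKind, number> = {
  "audio-in": 1,
  "audio-out": 1,
  "video-in": 2,
  "video-out": 2,
};

const total = probeScore(
  [
    { kind: "audio-in", score: 8, durationSec: 60 },
    { kind: "video-in", score: 5, durationSec: 60 },
  ],
  weights
);
console.log(total); // (60*8 + 120*5) / 180 = 6
```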

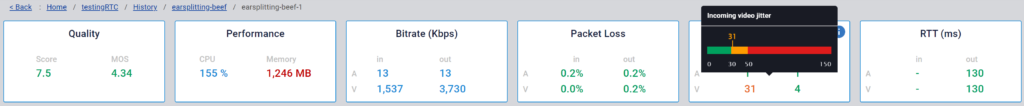

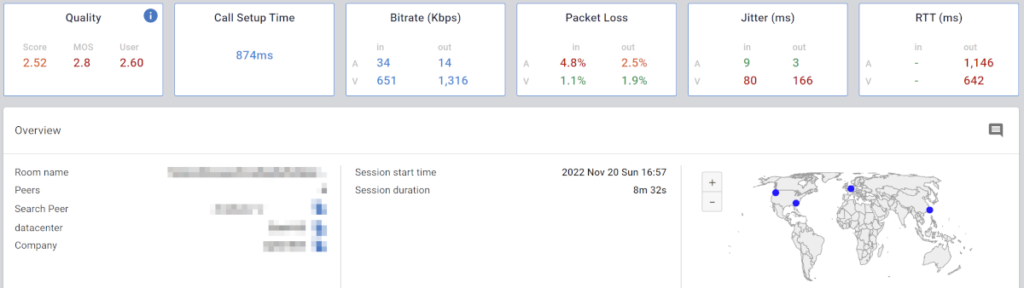

Ribbons and traffic lights

There’s a new traffic lights coloring scheme for ribbon information across testRTC. That includes anywhere where we show high level information at the top of a page.

You already know what I mean – right? 🟢🟡🔴

Green is good. Yellow/orange not so. Red you should check out.

Notice the nice new tooltip that also explains where within the range your value falls?

As we can’t know your exact use case, the thresholds we use can be reconfigured if needed – just reach out to us.

On probe/peer level

- The new Trace window has been polished with a modern design, making it a lot easier to read and navigate

- Audio channels now show their individual calculated MOS.

In Advanced WebRTC Analytics

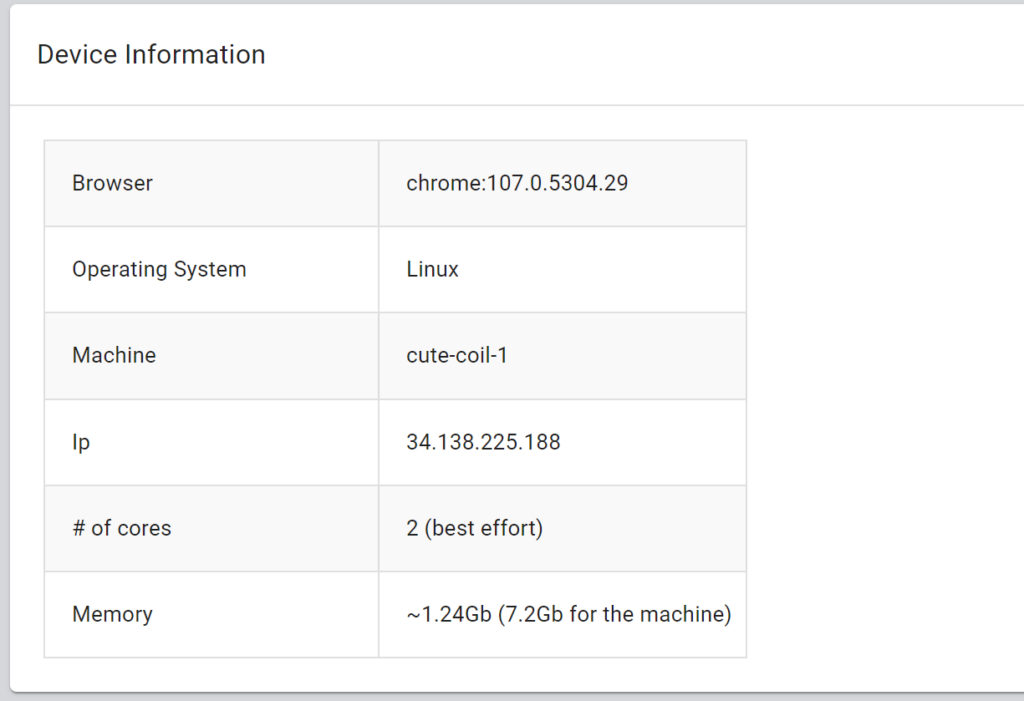

- For testingRTC and upRTC, some of our customers ask about the machines we’re using and their available resources. To make this easier to understand, we’ve added this information to the top of the Advanced WebRTC Analytics page.

- Start time and duration of peer connections are now prominently displayed

- The page has been “shortened” hiding areas you’re unlikely to need

- State machines now show only the first few states, with the option to see more if needed

- ICE candidate pairs table now shows only the first few candidates, with the option to see more if needed

- Events log now shows only the first few events, with the option to see more if needed

testingRTC & upRTC

- We’ve upgraded to Nightwatch v2.4.2, so we’re now running on the latest stable version

- Performance dashboard. That new feature explained above? Here’s where to find it

- In testingRTC, just go to testingRTC | Performance in the sidebar. Pick the tests and metrics you want to analyze and you’re good to go. The URL of the page will preserve your selection, so you can share it with others

- In upRTC, just go to the monitor’s configuration. Below the scheduling section, a performance dashboard will appear. You just need to select the metrics you are interested in

- Trace window is now available for testingRTC and upRTC results on the probe level view

qualityRTC

Deeper integrations

For those who are embedding the qualityRTC network testing user-facing page, we’ve added a few new capabilities to make this even more customizable:

- New URL parameters you can use with qualityRTC:

- ?returnurl=<url> Send the user to that URL at the end of a test

- ?hidewidgets=true Removes all widgets from the UI (to use in conjunction with returnurl)

- ?micId=<id>&camId=<id> Forces the use of specific microphone and camera devices during the test instead of the system’s defaults

- You can now use GET /qualityrtc/result/{testid} to get the results of a completed test. The structure returned adheres to the webhook structure

- A PDF of the generated results can be attached to an email sent back to you. This is useful when creating automated tickets in your support system based on these emails. You can learn more about this in our email integration article

- If needed, we can now configure a rotating message during the running test to appear in the popup window
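
As a rough sketch of how the URL parameters and the results endpoint above might be used from your own code: the base URL, the option names, and both helper functions below are hypothetical, and the API host and authentication needed for the GET request are not covered here.

```typescript
// Options mapping to the URL parameters listed above.
interface QualityRtcOptions {
  returnUrl?: string;
  hideWidgets?: boolean;
  micId?: string;
  camId?: string;
}

// Hypothetical helper: append the parameters to your qualityRTC page URL.
function buildQualityRtcUrl(baseUrl: string, opts: QualityRtcOptions): string {
  const url = new URL(baseUrl);
  if (opts.returnUrl) url.searchParams.set("returnurl", opts.returnUrl);
  if (opts.hideWidgets) url.searchParams.set("hidewidgets", "true");
  if (opts.micId) url.searchParams.set("micId", opts.micId);
  if (opts.camId) url.searchParams.set("camId", opts.camId);
  return url.toString();
}

// Hypothetical helper: path of the results endpoint for a given test id.
function resultPath(testId: string): string {
  return "/qualityrtc/result/" + encodeURIComponent(testId);
}

// Placeholder URLs and ids; replace them with your own values.
const testPageUrl = buildQualityRtcUrl("https://example.com/network-test", {
  returnUrl: "https://example.com/done",
  hideWidgets: true,
});
console.log(testPageUrl);
console.log(resultPath("12345"));
```

Using `URL` and `searchParams` takes care of percent-encoding the return URL, which is easy to get wrong when concatenating strings by hand.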

We have a new knowledge base article discussing the deep integration features available in qualityRTC.

Another improvement we’ve made is that now qualityRTC tries to figure out if different IP addresses are used for media and signaling and indicates that in the LOCATION test result.

Bulk export

Both qualityRTC and probeRTC now support data streams.

We introduced Data Streams as a feature in our last release. This capability allows our clients to export the data stored in testRTC using JSON files and cloud storage (AWS S3 for now). Our first release of this feature was for watchRTC. In this release, we are bringing data streams to qualityRTC and probeRTC.

Any tests conducted using qualityRTC and probeRTC can now be collected as a data stream of files.

watchRTC

More insights

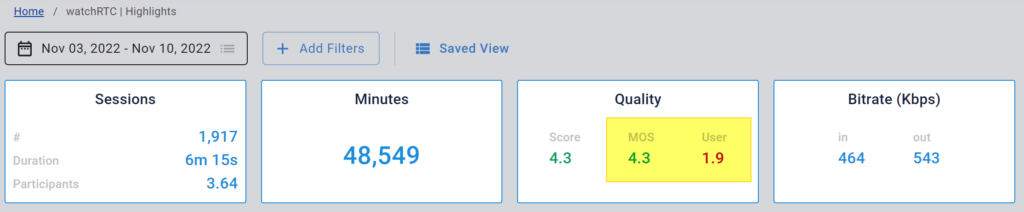

Highlights now show MOS and user ratings and not only Score.

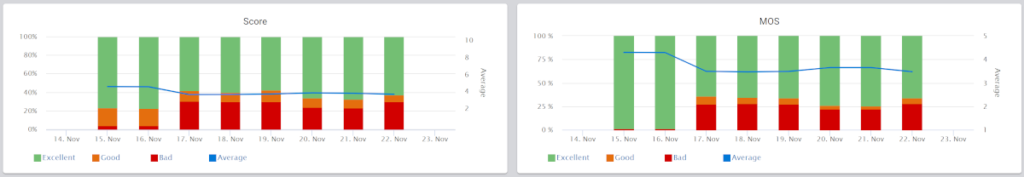

The Trends view now also includes MOS and Score graphs.

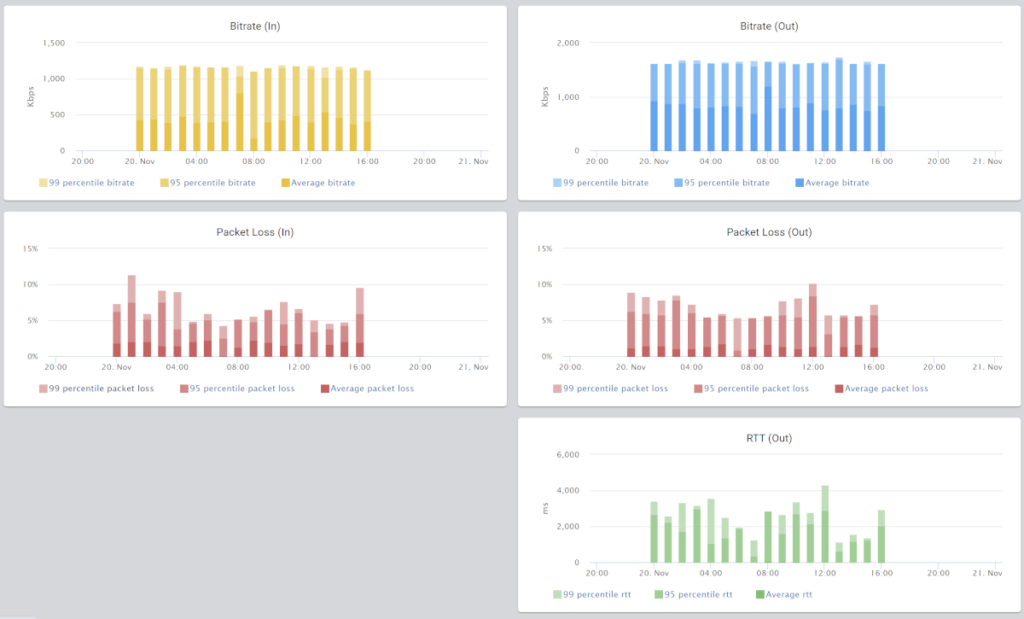

We’ve also split the packet loss and RTT graphs into two separate ones each – one for incoming and one for outgoing metric values.

Location, location, location

We heard you. You wanted more access to the location information. That of the users as well as the media servers they’re interacting with. So we sprinkled the UI with that information and made it more accessible in many ways.

The room and peer level views now have maps on them, pinpointing the locations of the users and the media servers.

The ISP has also been added to the peer level view and to the data exported via data streams.

SDK

We’re introducing a new beta feature: The SDK now exposes the metrics it collects to the application locally. You could get this information directly from WebRTC, but there are times when doing it through the watchRTC SDK is just… better:

- If you are using a third party CPaaS and its SDK doesn’t give you direct access to this data

- When you are using more than a single CPaaS vendor and would like to keep this part of your application clean of its APIs, so as not to hold multiple unaligned versions

- We now have Android and iOS SDKs available in beta. Reach out to our support team to learn more about these

Here and there

- Data streams in watchRTC now include ISP information, end time, audio and video metric total averages

- You can now mark all notifications as read/unread

- Added the ability to make the watchRTC log messages more verbose via a URL parameter (see here)