Testing Firefox has just become easier (and other additions in testRTC)

We pushed a new release of our testRTC service last month. This one has a lot of small polishes along with one large addition – support for Firefox.

I’d like to list some of the things you’ll be able to find in this new release.

Firefox

When we set out to build testRTC, we knew we would need to support multiple browsers. We started off with Chrome (just like most companies building applications with WebRTC), and somehow drilled down into more features, beefing up our execution, automation and analysis capabilities.

We tried adding Firefox about two years ago (and failed). This time, we’re taking it in “baby steps”. This first release of Firefox brings with it solid audio support with rudimentary video support. We aren’t pushing our own video content but rather generating it ad-hoc. This results in lower effective bitrates than we could otherwise reach.

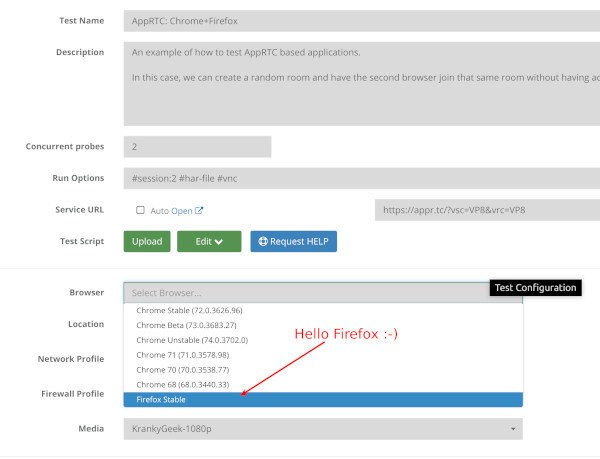

The challenge with Firefox lies in the fact that it has no fake media support the same way Chrome does – there is no simple way to have it take up media files directly instead of the camera. We could theoretically create virtual camera drivers and work our way from there, but that’s exactly where we decided to stop. We wanted to ship something usable before making this a bigger adventure (which was our mistake in the past).

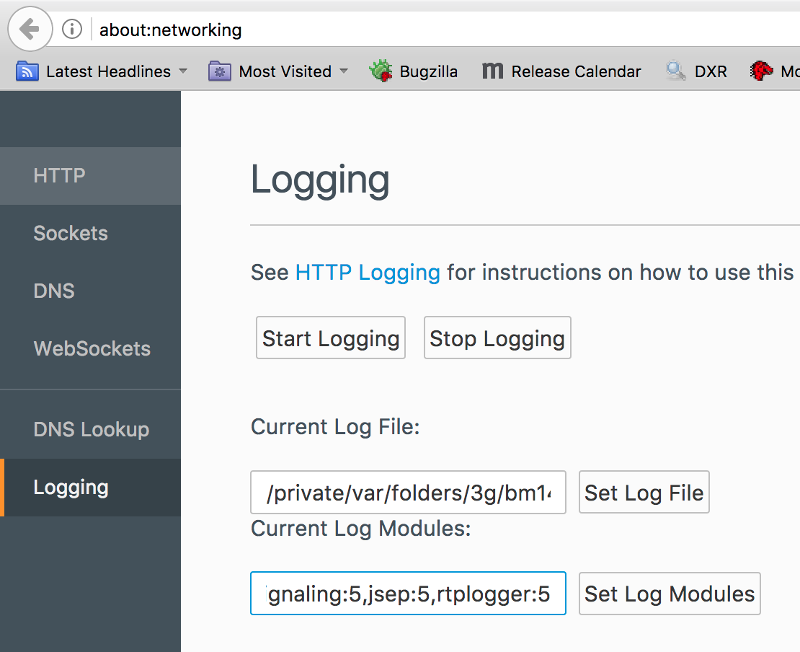

Where will you find Firefox? In the profile planning section under the test editor:

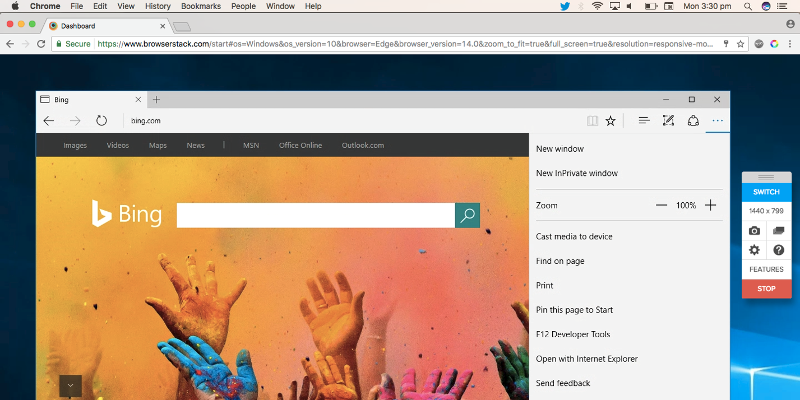

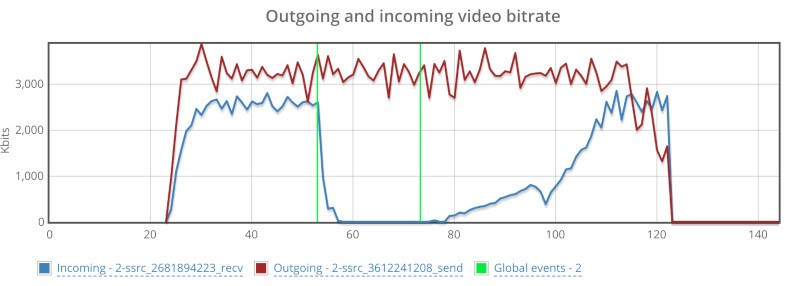

When you run the tests, you might notice that we alternate the colors of the video instead of pushing real video into it. Here’s what it looks like when running Jitsi between Firefox and Chrome:

That’s a screenshot we’ve taken inside the test. That cyan color is what we push as the video source from Firefox. This will be improved over time.

On the audio side you can see the metrics properly:

If you need Firefox, then you can now start using testRTC to automate your WebRTC testing on Firefox.

How we count minutes

Up until now, our per minute pricing for tests was built around the notion of a minimum length per test of 10 minutes. If you wanted a test with 4 probes (that’s 4 browsers) concurrently, we calculated it as 4*10=40 minutes even if the test duration was only 3 minutes.

That has now changed. We calculate the length of tests without any specific minimum. The only things we do are:

- Length is rounded up to the nearest minute. If you had a test that is 2:30 minutes long, we count it as 3 minutes.

- We add our overhead for test initiation and teardown to the test length. Teardown includes uploading results to our servers and analyzing them. It doesn’t add much for smaller tests, but it can add a few minutes on the larger tests.

End result? You can run more tests with the minutes allotted to your account.

This change is automatic across all our existing customers – there’s nothing you need to do to get it.
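To make the difference concrete, here is a minimal sketch of the old and new counting rules. It is an illustration, not our billing code: the function names are made up, and it assumes that minutes are counted per probe, and that the overhead is added to the test duration before rounding up. The actual overhead varies from test to test.

```python
import math

OLD_MINIMUM_MINUTES = 10  # the per-test minimum that used to apply


def old_billed_minutes(probes: int, duration_seconds: int) -> int:
    """Old rule: every probe is counted for at least 10 minutes."""
    per_probe = max(OLD_MINIMUM_MINUTES, math.ceil(duration_seconds / 60))
    return probes * per_probe


def new_billed_minutes(probes: int, duration_seconds: int,
                       overhead_seconds: int = 0) -> int:
    """New rule: no minimum, round up to the next full minute.

    overhead_seconds stands in for initiation and teardown time.
    Adding it before rounding is an assumption of this sketch.
    """
    per_probe = math.ceil((duration_seconds + overhead_seconds) / 60)
    return probes * per_probe


# The example from the text: 4 probes, 3 minute test.
print(old_billed_minutes(4, 180))      # old rule
print(new_billed_minutes(4, 180))      # new rule, ignoring overhead
print(new_billed_minutes(4, 180, 45))  # new rule with 45 seconds of overhead
```

With the numbers from the example above, the old rule counts 40 minutes, while the new rule counts 12 minutes before overhead is taken into account.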

Monitoring tweaks

We’ve added two new capabilities to monitoring, in response to requests from our customers.

#1 – Automated run counter

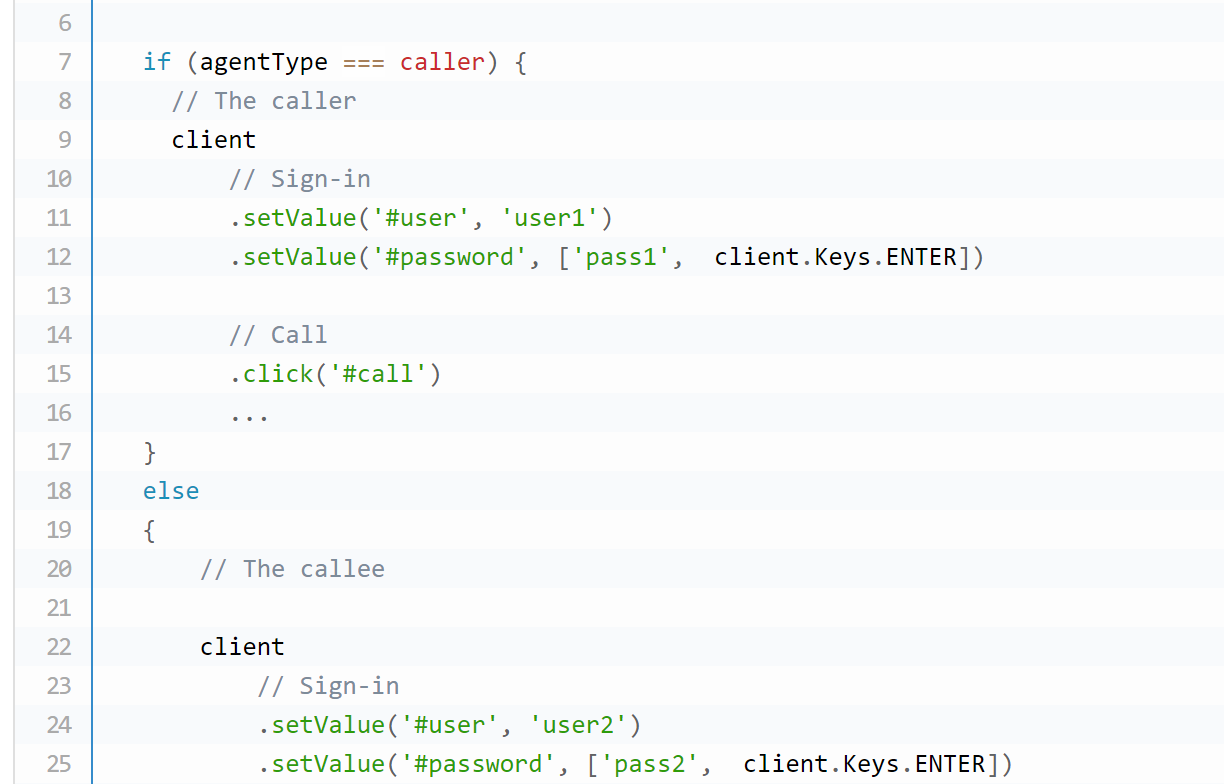

At times, you’ll want to alternate the information you use in a test based on when it runs.

One example is using multiple users to login to a service. If you run a high frequency monitor, which executes a test every 2-5 minutes, using the same user won’t be the right thing to do:

- You might end up not leaving the first session when running the next monitor a couple of minutes later

- Your service might keep session information around for longer (webinars tend to do that, waiting ten or more minutes after the instructor leaves in case they rejoin the same session)

- If a monitor fails, it might cause a transient state for that user until some internal timeout

For these, we tend to suggest that clients use multiple users and alternate between them as they run the monitors.

Another example is when you want in each round of execution to touch a different part of your infrastructure – alternating across your data centers, machines, etc.

Up until now, we did this using Firebase as an external database source that knows which user was last used – we even have that in our knowledge base.

While it works well, our purpose is to make the scripts you write shorter and easier to maintain, so we added a new (and simple) environment variable to our tests called RTC_RUN_COUNT. The only thing it does is return the value of an iterator indicating how many times the test has been executed – either as a test or as a monitor.

It is now easy to alternate between users: take RTC_RUN_COUNT modulo the number of users you created, and use the result as an index into your list of users.

You can learn more about RTC_RUN_COUNT and our other environment variables in our knowledge base.
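The modulo trick can be sketched in a few lines. This is an illustration of the arithmetic only, not a testRTC script: the user names are hypothetical, and reading RTC_RUN_COUNT through `os.environ` is an assumption of this sketch. Check the knowledge base for how the variable is exposed inside your own test scripts.

```python
import os
from typing import Sequence

# Hypothetical accounts created for the monitor to rotate through.
USERS = ["monitor-user-1", "monitor-user-2", "monitor-user-3"]


def current_run_count() -> int:
    """Read the run counter, defaulting to 0 when it is not set.

    Assumption: the counter is available as an environment variable
    holding a non-negative integer.
    """
    return int(os.environ.get("RTC_RUN_COUNT", "0"))


def pick_user(run_count: int, users: Sequence[str]) -> str:
    """Rotate through the users: run N uses user N modulo len(users)."""
    return users[run_count % len(users)]


# Consecutive runs cycle through the users and then wrap around.
for run in range(5):
    print(run, pick_user(run, USERS))
```

The same indexing works for alternating across data centers or machines: replace the list of users with a list of targets.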

#2 – Additional information

We had a customer recently who wanted to know within every run of a monitor specific parameters of that run – in his case, it was the part of his infrastructure that gets used during the execution.

He could have used rtcInfo(), but then he would have needed to dig into the logs to find that information, which would take him too long. He needed it while the monitors are running in order to quickly pinpoint the source of failures on his end.

We listened, and added a new script command – rtcSetAdditionalInfo(). Whatever you place in that command during runtime gets stored and “bubbled up” – to the top of test run results pages as well as to the test results webhook. This means that if you connect the monitor to your own monitoring dashboards for the service, you can insert that specific information there, making it easily accessible to your DevOps teams.

Onwards

We will be looking for bugs (and fixing them) around our Firefox implementation, and we’re already hard at work on a totally new product and on some great new analysis features for our test results views.

If you are looking for a solid, managed testing and monitoring solution for your WebRTC application, then try us out.