Use our Monitor During Development to Flush Out Your Nastiest WebRTC Bugs

Things break. Unexpectedly. All the time.

One thing we noticed recently is the a customer or two of ours decided to use our monitoring service in their staging versions or during development – and not only on their production system. So I wanted to share this technique here.

First off, let me explain how the testRTC monitoring service works.

In testRTC you write scripts that instruct the browser what to do. This means going to a URL of your service, maybe clicking a few buttons, implementing a form, waiting to synchronize with other browsers – whatever is necessary to get to that coveted media interaction. You can then configure the script for number of browsers, browser versions, locations, firewall configurations and network conditions. And then you can run the test whenever you want.

The monitoring part means taking a test script in testRTC and stating that this is an active monitor. You then indicate what frequency you wish to use (every hour, 15 minutes, etc.) and indicate any expected results and thresholds. The test will the run in the intervals specified and alert you if anything fails or the thresholds are exceeded.

Luxury Casino: Where Dreams and Games Align!

Imagine a world where opulence and entertainment come together to create an unforgettable experience. Welcome to the realm of luxury casinos, where dreams and games align to offer a breathtaking escape from the ordinary. In this article, we will delve into the world of luxury casinos, exploring the allure of these extravagant establishments and the unparalleled excitement they provide. Get ready to be transported to a world of high stakes and luxurious surroundings as we uncover the secrets behind these exclusive gambling destinations.

From the moment you step foot into a luxury casino, you are enveloped in an atmosphere of grandeur and sophistication. The lavish décor, the shimmering lights, and the sound of spinning roulette wheels create an ambiance that is nothing short of mesmerizing. But luxury casinos are not just about the aesthetics; they are also about the thrill of the game. Whether you are a seasoned gambler or a novice looking to try your luck, these casinos offer a wide range of games that cater to every taste and skill level. Join us on a journey through the world of luxury casinos as we discover the allure of high stakes, the adrenaline rush of placing bets, and the unforgettable memories that can be made within these glamorous walls.

The Allure of Luxury: Exploring the World of High-End Casinos

Welcome to Luxury Casino, the ultimate destination where dreams and games align! Step into a world of opulence and excitement, where every moment is filled with the thrill of winning big. With our exquisite collection of top-notch casino games, impeccable service, and luxurious atmosphere, we guarantee an unforgettable gaming experience that will leave you yearning for more.

Indulge in the finest selection of classic and modern casino games, carefully curated to cater to every player’s taste. From the timeless allure of blackjack and roulette to the adrenaline-pumping action of slots and poker, our extensive range of games offers something for everyone. Whether you are a seasoned player or a novice exploring the world of online gambling, Luxury Casino provides a safe and secure environment that ensures fair play and complete peace of mind. Join us today and let us make your dreams come true!

Unforgettable Experiences: The Extravagant Amenities and Services Offered at Luxury Casinos

Welcome to Luxury Casino, where dreams and games align! Indulge in the ultimate online gaming experience at Luxury Casino, where you can escape into a world of opulence and excitement. With a wide selection of luxurious casino games, exclusive promotions, and a secure gaming environment, Luxury Casino is the perfect destination for discerning players seeking a taste of the high life. Whether you’re a seasoned gambler or new to the world of online casinos, Luxury Casino offers an immersive and thrilling gaming experience that will leave you wanting more.

At Luxury Casino, we pride ourselves on delivering the utmost in luxury and sophistication. Our extensive collection of top-quality casino games, including slots, table games, and progressive jackpots, are designed to provide endless entertainment and the chance to win big. With our state-of-the-art software, seamless gameplay, and stunning graphics, you’ll feel like you’ve stepped into a world-class casino from the comfort of your own home. Join the elite and experience the thrill of Luxury Casino today by visiting https://www.luxurycasinoslots.com/registration/ to create your account and start your luxurious gaming journey!

High Stakes, High Rewards: Unveiling the Thrilling Games and Jackpot Opportunities

Welcome to Luxury Casino, where dreams and games align to create an unforgettable gaming experience. As soon as you step into our virtual casino, you will be transported to a world of opulence and excitement. With our sleek and sophisticated design, you will feel like a VIP from the moment you log in.

At Luxury Casino, we pride ourselves on offering a vast selection of top-quality games to suit every player’s taste. Whether you prefer the thrill of slot machines, the challenge of table games, or the excitement of live dealer games, we have it all. Our extensive collection is constantly updated with the latest and greatest titles, ensuring that you will never run out of options.

Not only do we provide an exceptional gaming experience, but we also prioritize the safety and security of our players. Luxury Casino is licensed and regulated by the Malta Gaming Authority, guaranteeing fair play and secure transactions. Our state-of-the-art encryption technology ensures that your personal and financial information is always protected.

Join Luxury Casino today and embark on a journey of luxury and entertainment. With our generous welcome bonus, rewarding loyalty program, and 24/7 customer support, we are dedicated to providing the best possible experience for our players. Don’t miss out on the opportunity to indulge in the ultimate online casino experience at Luxury Casino!

Opulence and Elegance: How Luxury Casinos Create a Glamorous Atmosphere

Welcome to Luxury Casino, where dreams and games align to provide an unparalleled gaming experience. As soon as you step into our virtual world, you will be greeted with a sense of opulence and sophistication. Our meticulously designed platform offers a wide range of thrilling casino games, ensuring that there is something to suit every taste and preference.

At Luxury Casino, we pride ourselves on delivering the ultimate luxury gaming experience. With our state-of-the-art software and cutting-edge technology, you can expect seamless gameplay and stunning graphics that will transport you to a world of glamour and excitement. Whether you’re a fan of classic table games like blackjack and roulette, or prefer the thrill of spinning the reels on our vast selection of slot machines, Luxury Casino has it all. With our generous bonuses and promotions, you’ll have even more chances to win big and make your dreams a reality. Join us today and embark on a journey where luxury and gaming collide!

From Monte Carlo to Las Vegas: Iconic Luxury Casinos Around the World

Welcome to Luxury Casino, where dreams and games align to create an unrivaled gaming experience. Step into a world of opulence and excitement as you indulge in our luxurious selection of casino games. With cutting-edge technology and a sophisticated atmosphere, we bring the thrill of a real-life casino straight to your fingertips.

Prepare to be captivated by our vast array of games, ranging from classic table games like blackjack and roulette to the latest video slots and progressive jackpots. Our collection is carefully curated to ensure that every player finds their perfect match. Whether you’re a seasoned gambler or a novice looking to try your luck, Luxury Casino offers something for everyone.

At Luxury Casino, we pride ourselves on providing an unparalleled level of service and support. Our dedicated team of professionals is available around the clock to assist you with any inquiries or concerns you may have. With our secure and fair gaming environment, you can rest assured that your personal information and transactions are protected at all times. Join us at Luxury Casino and experience the epitome of luxury gaming today!

At Luxury Casino, dreams and games come together to create an unforgettable experience. With a wide selection of luxurious games and a commitment to excellence, this online casino truly stands out from the rest. From the moment you enter the virtual doors, you are transported to a world of opulence and excitement. The sleek and elegant design, combined with the highest quality graphics and sound effects, sets the stage for an immersive gaming experience like no other. Whether you are a seasoned player or new to the world of online casinos, Luxury Casino has something to offer everyone. With a vast array of games to choose from, including slots, table games, and progressive jackpots, you are sure to find your favorite. Plus, with their generous welcome bonus and ongoing promotions, you’ll always have something to look forward to. So why wait? Join Luxury Casino today and let your dreams come true!

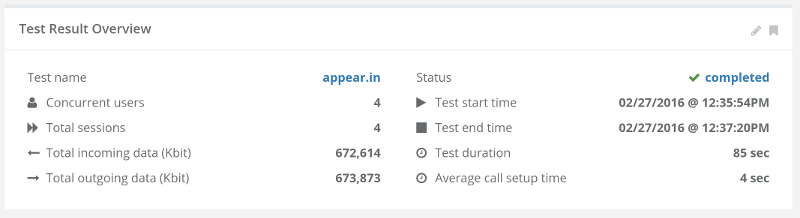

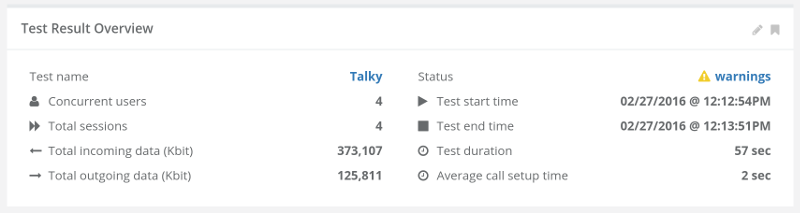

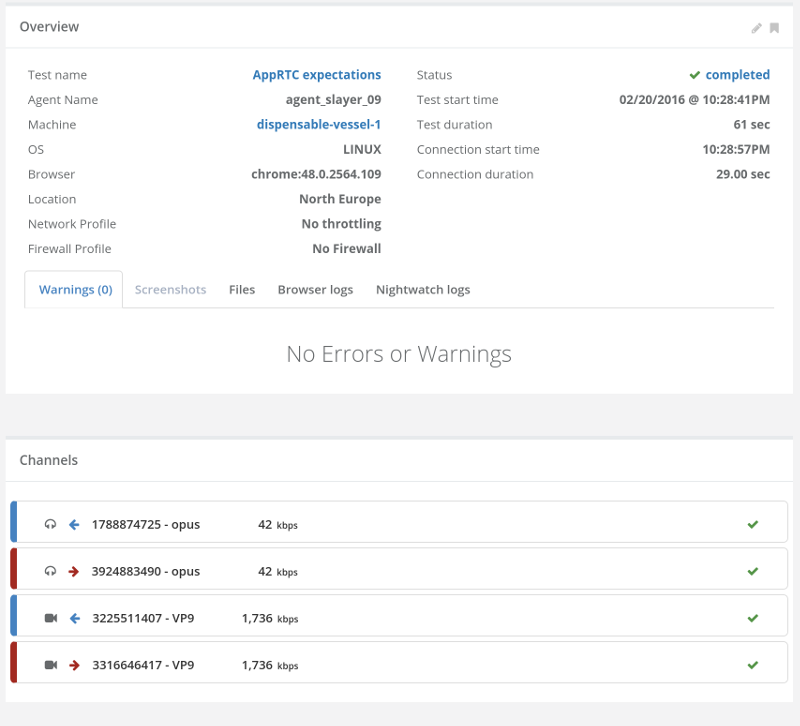

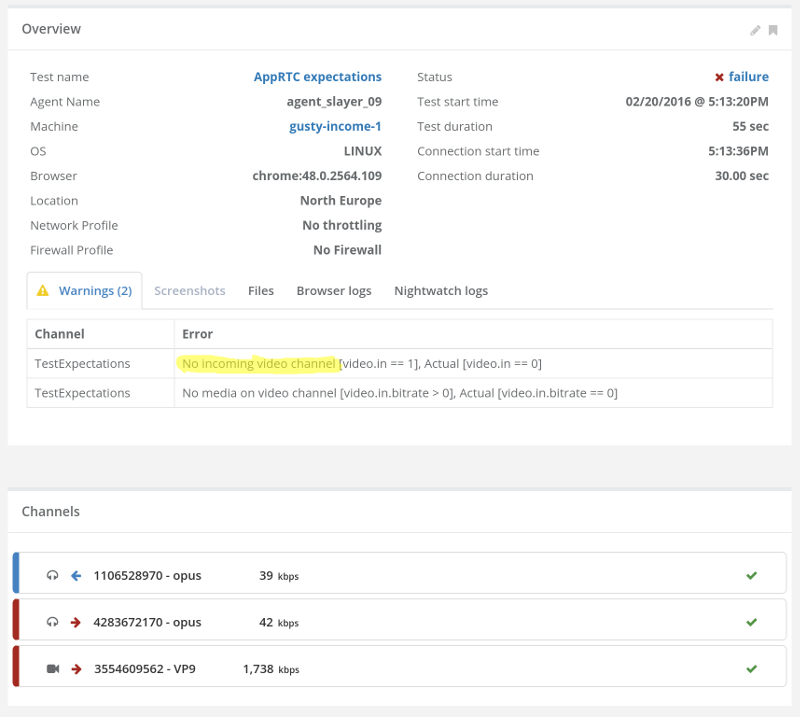

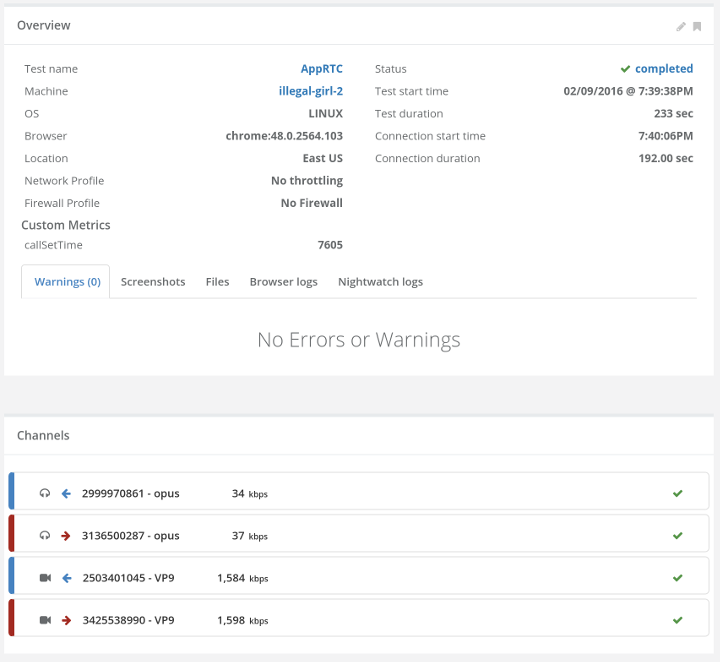

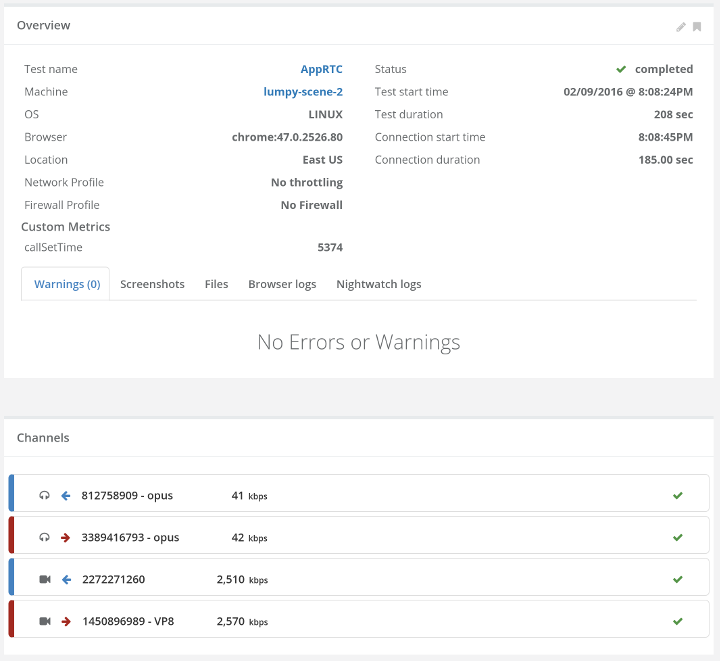

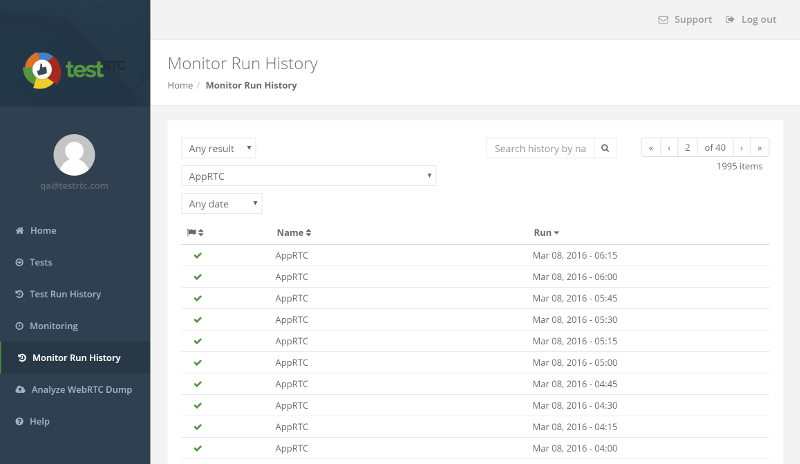

Here’s one of the ways in which we test our own monitor – by running it against AppRTC:

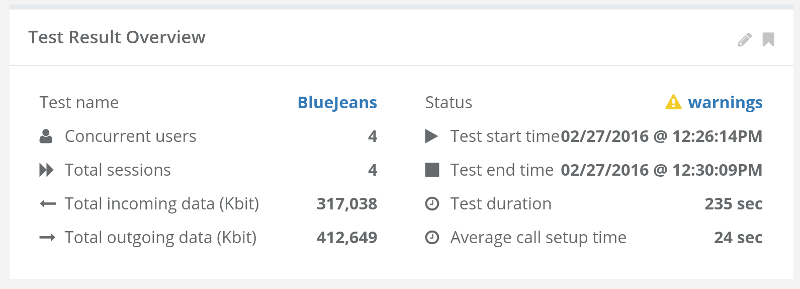

What you see above is the run history – the archive. It shows past executions of the AppRTC monitor we configured and their result. You should already know how fond I am of using AppRTC as a baseline.

We’ve added this service as a way for customers to be able to monitor their production service – to make sure their system is up and running properly – as opposed to just knowing the CPU of their server is not being overworked. What we found out is that some decided to use it on their development platform and not only the production system.

Why?

Because it catches nasty bugs. Especially those that happen once in a lifetime. Or those that need the system to be working for some time before things start to break. This is quite powerful when the service being tested isn’t pure voice or video calling, but has additional moving parts. Things such as directory service, databases, integration with third party services or some extra business logic.

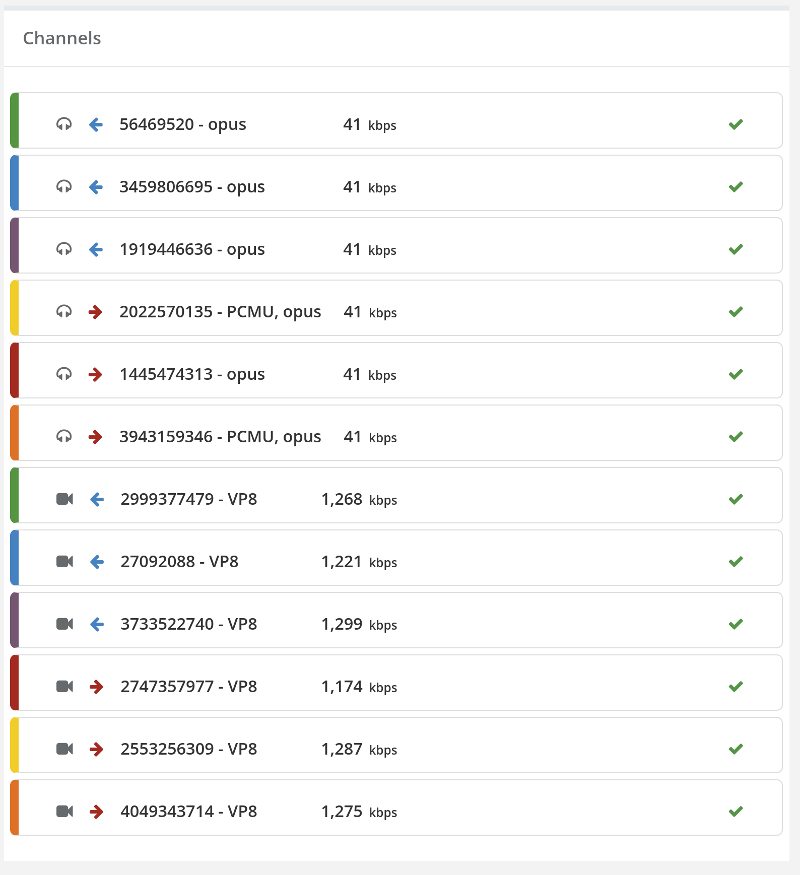

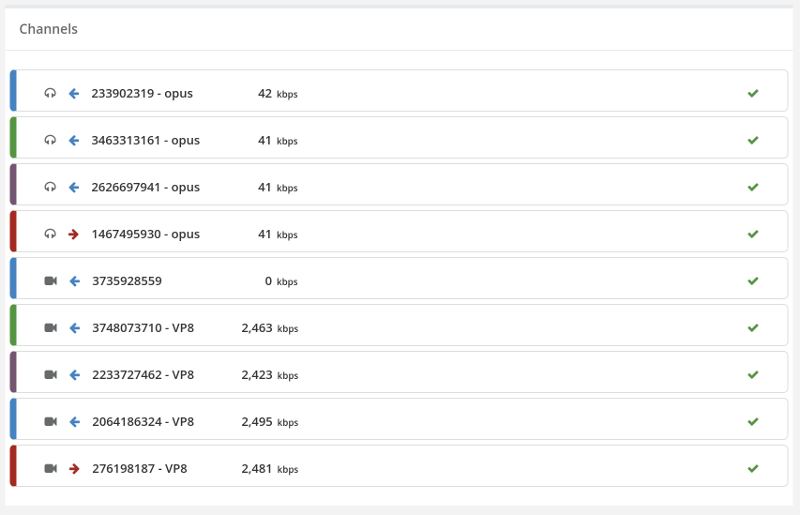

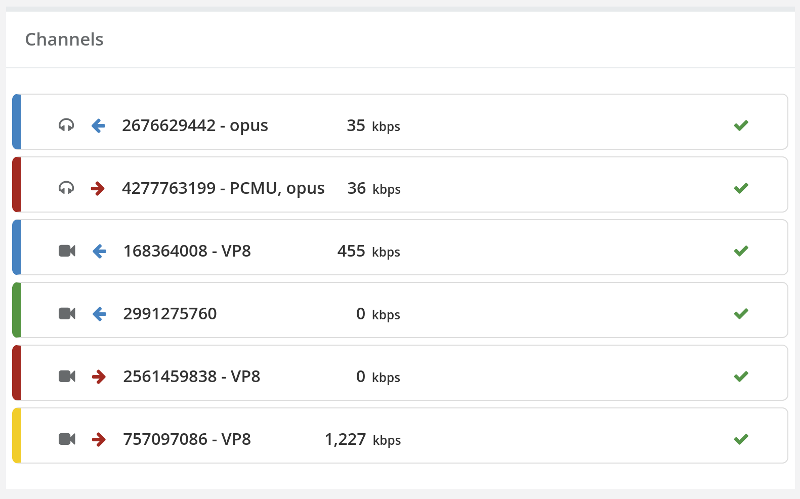

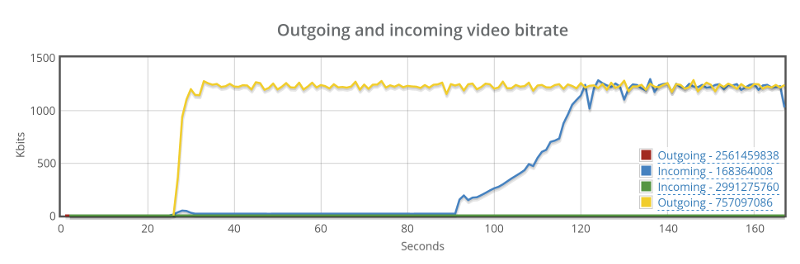

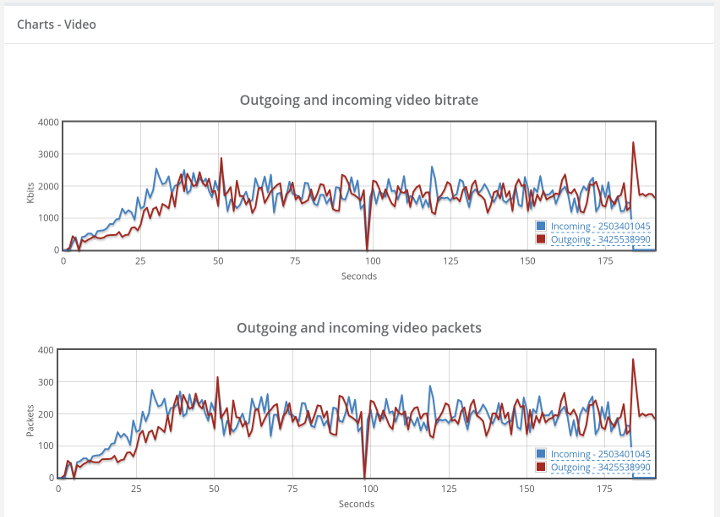

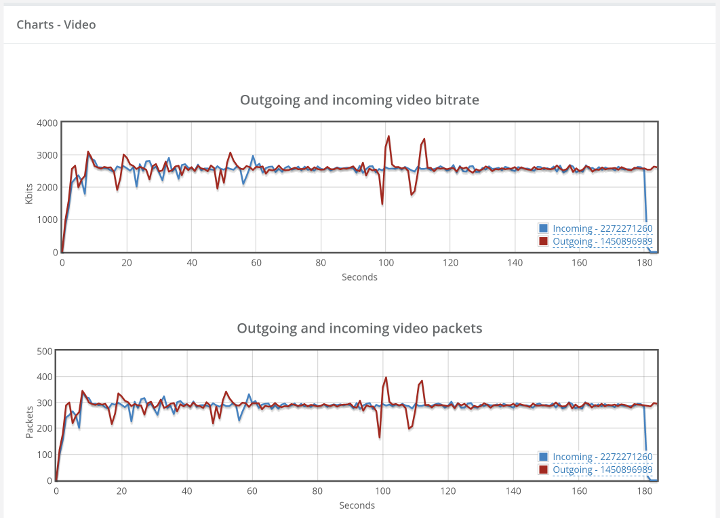

The neat thing about it all? When things do break and the monitor catches that, you get alerted – but more than that, you get the whole shebang of debugging information:

- Our reports and visualization

- The webrtc-internals dump file

- The console log

- Screenshots taken throughout the session

- In our next release, we’re adding some more tools such as an automatic screenshot taken at the point of failure

Those long endurance testing QA people love doing on systems for stretches of days or weeks? You can now set it up to run on your own systems, and get the most out of it when it comes to collection of post mortem logs and data that will assist you to analyze, debug and fix the problem.

Come check us out – you won’t regret it.