If you are developing WebRTC applications that target large-scale events (think hundreds of users in a single “room”), then read on.

LiveSwitch Cloud by Frozen Mountain is a modern CPaaS offering focused on video communications. Naturally, it makes use of WebRTC and builds on Frozen Mountain's long heritage and capabilities in this space. Frozen Mountain has evolved from a vendor specializing in SDKs and media servers you can host on your own into one that also provides its own managed cloud service. In essence, it is dogfooding its own technology.

One of the strong markets Frozen Mountain operates in is the entertainment industry, where large-scale online virtual events are becoming the norm. A recent testRTC client from this industry used our WebRTC stress testing capabilities to validate its scenario ahead of a large event.

This client’s scenario included segmenting the audience of a live event into groups of 25 viewers that could easily be monitored by producers in a studio control room and displayed to performers as a virtual audience that they could see, hear, and interact with during the event. We settled on 36 such segments, totalling 900 viewers in this WebRTC stress test.

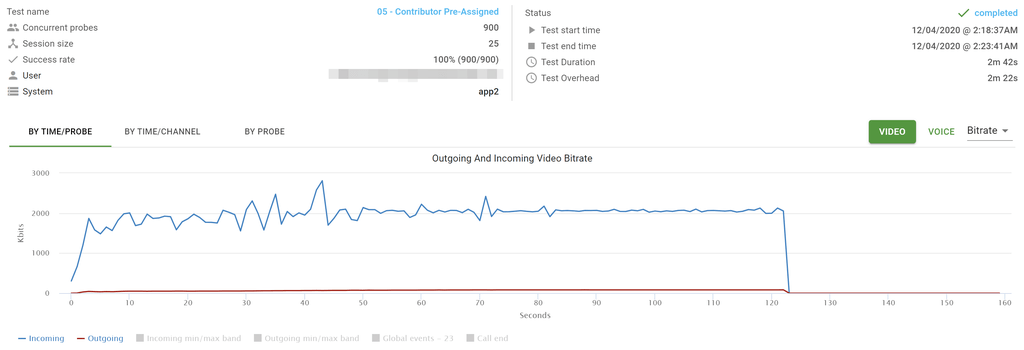

Here is a sample test run from this work:

The graph above shows the 900 WebRTC probes that were used in one of these tests. The blue line denotes the average incoming bitrate of the main event over time, as seen by each viewer. The red line is the outgoing bitrate. Since these viewers were there to convey the atmosphere of the event, there was no need for them to stream at high bitrates: with 900 of them, even a low bitrate adds up to a lot of pixels in aggregate. You can see how the incoming bitrate stabilizes at around 2 Mbps for all the viewers.
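To put that 2 Mbps figure in perspective, here is a back-of-the-envelope sketch of the aggregate bitrate the media servers had to deliver to all viewers. It is only an illustration: the function name is hypothetical, and it assumes every viewer receives the same ~2 Mbps stream while ignoring protocol overhead and the viewers' own outgoing streams.

```python
def aggregate_downstream_mbps(viewers: int, per_viewer_mbps: float) -> float:
    """Total bitrate sent to all viewers (hypothetical helper; ignores protocol overhead)."""
    return viewers * per_viewer_mbps


# 36 segments of 25 viewers each, ~2 Mbps incoming per viewer (from the graph above)
total = aggregate_downstream_mbps(viewers=36 * 25, per_viewer_mbps=2.0)
print(f"{total:.0f} Mbps ≈ {total / 1000:.1f} Gbps")  # prints "1800 Mbps ≈ 1.8 Gbps"
```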

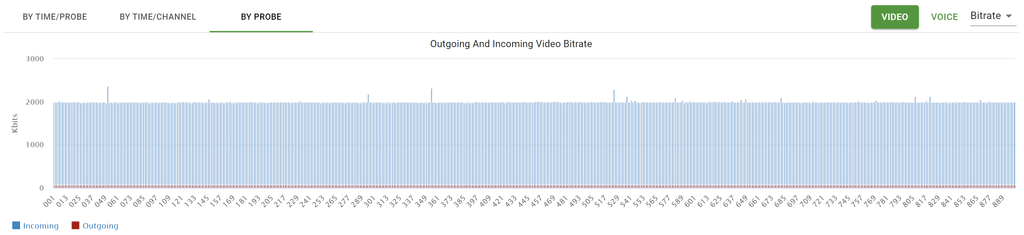

This graph shows, for each of the 900 WebRTC browser probes, the average bitrate it had throughout the test. It is a slightly different view of the same data, meant to surface outliers.

Only a few of the probes show slight variations, which indicates a stable system overall.
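Spotting outliers in a per-probe view like this can also be automated. The sketch below is not how testRTC computes this graph; it is a minimal example, assuming you already have each probe's average bitrate, that flags probes deviating from the median by more than a chosen fraction. The function name, the 20% tolerance, and the sample values are all hypothetical.

```python
from statistics import median


def find_outliers(avg_bitrates_kbps: dict, tolerance: float = 0.2) -> list:
    """Return probe IDs whose average bitrate deviates from the median
    by more than `tolerance` (a fraction of the median)."""
    mid = median(avg_bitrates_kbps.values())
    return sorted(
        probe
        for probe, rate in avg_bitrates_kbps.items()
        if abs(rate - mid) > tolerance * mid
    )


# Hypothetical per-probe averages in kbps; not data from the actual test run.
sample = {
    "probe-001": 2010.0,
    "probe-002": 1985.0,
    "probe-003": 1400.0,
    "probe-004": 2040.0,
}
print(find_outliers(sample))  # prints ['probe-003']
```

Using the median rather than the mean keeps a handful of misbehaving probes from shifting the baseline they are compared against.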

What was great about this one is the additional work Frozen Mountain did on their end: the viewers were split into segments that had to be filled randomly, as they would be in real life, with each user joining at their own pace instead of the segments being packed one after the other with people acting like automatons.

The above animation was created by Frozen Mountain to illustrate the audience. Each square is a user, and each segment/pool has 25 users in it. You can see how the 900 probes from testRTC randomly fill out the audience to capacity.
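The random filling itself is simple to model. This is not Frozen Mountain's implementation; it is a minimal sketch, assuming viewers arrive in a random order and each one is placed in a uniformly random segment that still has free seats. The constant and function names are hypothetical.

```python
import random
from typing import List, Optional

SEGMENTS = 36           # number of audience segments/pools
SEATS_PER_SEGMENT = 25  # viewers per segment


def fill_segments_randomly(num_viewers: int, seed: Optional[int] = None) -> List[List[int]]:
    """Seat viewers in random arrival order, each in a random non-full segment."""
    rng = random.Random(seed)
    arrivals = list(range(num_viewers))
    rng.shuffle(arrivals)  # viewers join at their own pace, in no particular order
    segments: List[List[int]] = [[] for _ in range(SEGMENTS)]
    for viewer in arrivals:
        open_segments = [i for i, seg in enumerate(segments) if len(seg) < SEATS_PER_SEGMENT]
        if not open_segments:
            raise RuntimeError("audience is at capacity")
        segments[rng.choice(open_segments)].append(viewer)
    return segments


segments = fill_segments_randomly(SEGMENTS * SEATS_PER_SEGMENT, seed=42)
print(all(len(seg) == SEATS_PER_SEGMENT for seg in segments))  # prints True
```

With exactly 900 viewers, every segment ends up at its 25-viewer capacity, but the order in which segments fill up differs from run to run, much like the animation.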

Testing for live WebRTC events at scale

Recently, we have been seeing a different approach to testing.

As the industry shifts from nice-to-haves and proofs of concept to production systems, there is a bigger need to thoroughly test the performance and scale of WebRTC applications. This is doubly true for large events broadcast live to audiences. Such events take place in two different industries: entertainment and enterprise.

Within the entertainment industry, it is about working around the pandemic: bringing audiences back to stadiums and theatre halls, albeit remotely. With enterprises, it is largely about virtual town halls, sales kickoffs and corporate team building while everyone is sheltered at home.

In both industries, the cost of a mistake is high because there is no second chance. You can’t really rerun the same match or reschedule the town hall, especially not with so many people and so much planning involved in making the event happen.

End-to-end stress testing is an important milestone here. While media server frameworks and CPaaS vendors do their own testing, such solutions need to be tested end-to-end for scale. Bottlenecks can occur anywhere in the system and the only real way to find these bottlenecks is through rigorous stress testing.

Being able to create a test environment quickly and scale it to full capacity is paramount to the success of the platform used for such events. It is also where much of our effort has gone in recent months, as more vendors approach us for help with these challenges.

On our end, we solved some bottlenecks in our infrastructure that “held us back” and limited us to assisting our clients with at most 2,000 probes in a single test. We can now go beyond that, with greater flexibility.