
WebRTC Application Monitoring: Do you Wipe or Wash?

UPDATE: Recording of this webinar can be found here.

If you are running an application then you are most probably monitoring it already.

You’ve got New Relic, Datadog or some other cloud service or on-premises monitoring setup handling your APM (Application Performance Management).

What does that mean exactly with WebRTC?

If we do the math, you’ve got the following servers to worry about:

- STUN/TURN servers, deployed in one or more (probably more) data centers

- Signaling server, at least one. Maybe more when you scale the service up

- Web server, where you actually host your application and its HTML pages

- Media servers, optional, to handle recording or group calls (look at our Kurento sizing article for some examples)

- Database, while you might not have this, most services do, so that’s another set of headaches

- Load balancers, distributed memory datagrid (call this redis), etc.

Lots and lots of servers in that backend of yours. I like to think of them as moving parts. Every additional server that you add. Every new type of server you introduce. It adds a moving part. Another system that can fail. Another system that needs to be maintained and monitored.

WebRTC is a very generous technology when it comes to the variety of servers it needs to run in production.

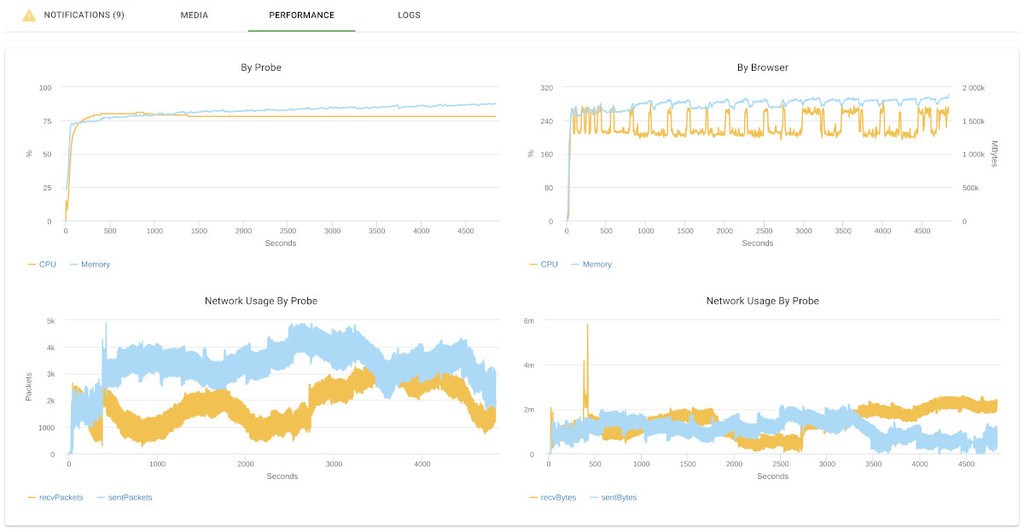

Assuming you’re doing application monitoring on these servers, you are collecting all machine characteristics. CPU use, bandwidth, memory, storage. For the various servers you can go further and collect specific application metrics.

Is that enough? Aren’t you missing something?

Here are 4 quick stories we’ve heard in the last year.

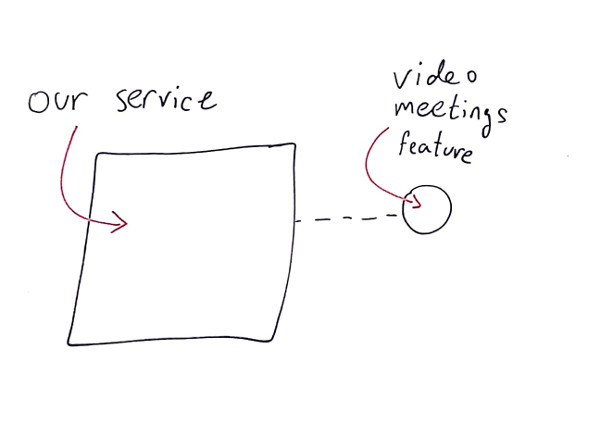

#1 – That Video Chat Feature? It Is Broken

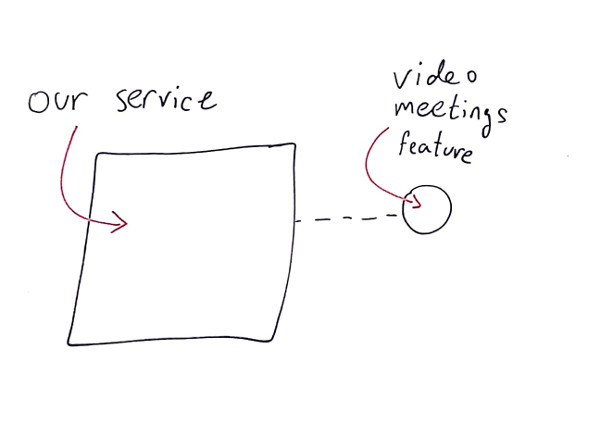

We’re still figuring out this whole embeddable communications trend. The idea of companies taking WebRTC and shoving voice and video calling capabilities into an existing product and workflow. It can be project management tools, doctor visitations, meeting scheduler, etc.

In some cases, the interactions via WebRTC are an experiment of sorts. A decision to attempt embedding communications directly into the existing product instead of having users figure out how to communicate on their own (phone calls and Skype were the most common alternatives).

Treated as an experiment, such integrations sometimes fell out of focus while the development teams rushed to handle other tasks within the core product, as so often happens.

In one such case, the company used a CPaaS vendor to get that capability integrated with their service, so they didn’t think much about monitoring it.

At least not until they found out one day that their video meetings feature had been malfunctioning for over two weeks (!). Customers tried using it, failed and just moved on, until someone complained loudly enough.

The problem ended up being the use of a deprecated CPaaS SDK that should have been upgraded and wasn’t.

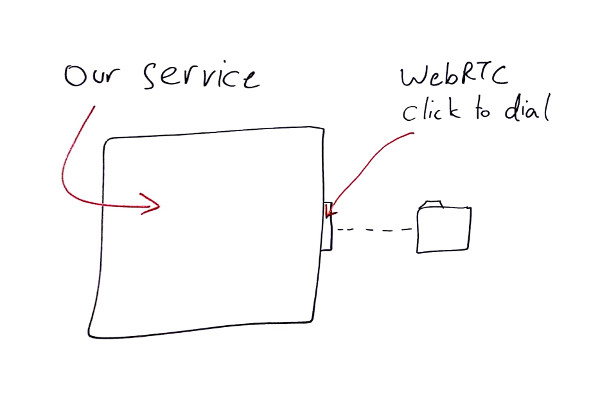

#2 – But Our Service is Working. Just not the Web Calling Part

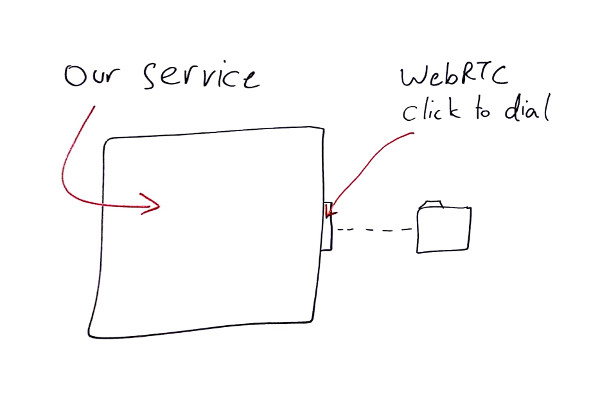

In many cases, there’s an existing communication product that does most of its “dealings” over PSTN and regular phone numbers. Then one day, someone decides to add browser dialing. Next thing that happens, you’ve got a core product doing communications with a new WebRTC-based feature in there.

Things are great and calls are being made. Until one day a customer calls to complain. He embedded a call button in his website, but people stopped calling him from the site. This went on for a couple of days while he tweaked his business and tried to figure out what was wrong. Until he found out that the click-to-call button on the website just didn’t work anymore.

Again, all the monitoring and health check metrics were fine, but nobody was watching the integration point between WebRTC and the rest of the system.

The challenge here was that this got caught by a customer who was paying for the service. What the company wanted to do at that point was to make sure this doesn’t repeat itself. They wanted to know about their integration issues before their customers do.

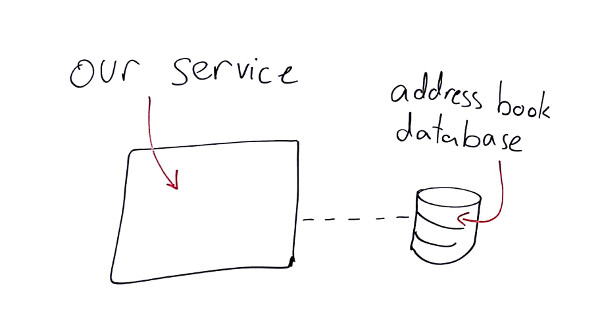

#3 – Where’s My Database When I Need it?

Here’s another one. A customer of ours has this hosted unified communications service that runs from the browser. You log in with your credentials, see a contact list and can dial anyone or receive calls right inside the browser.

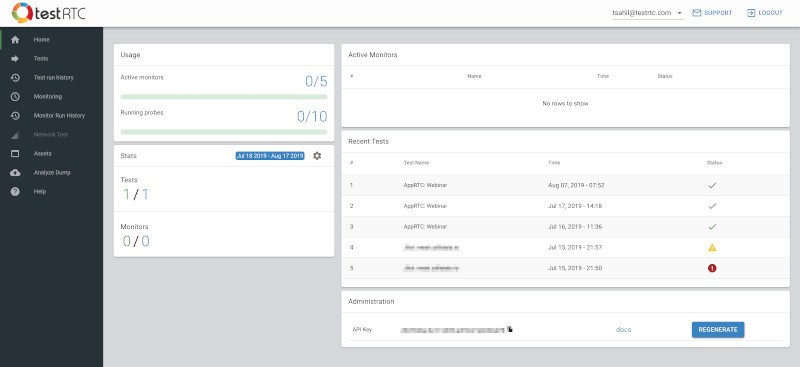

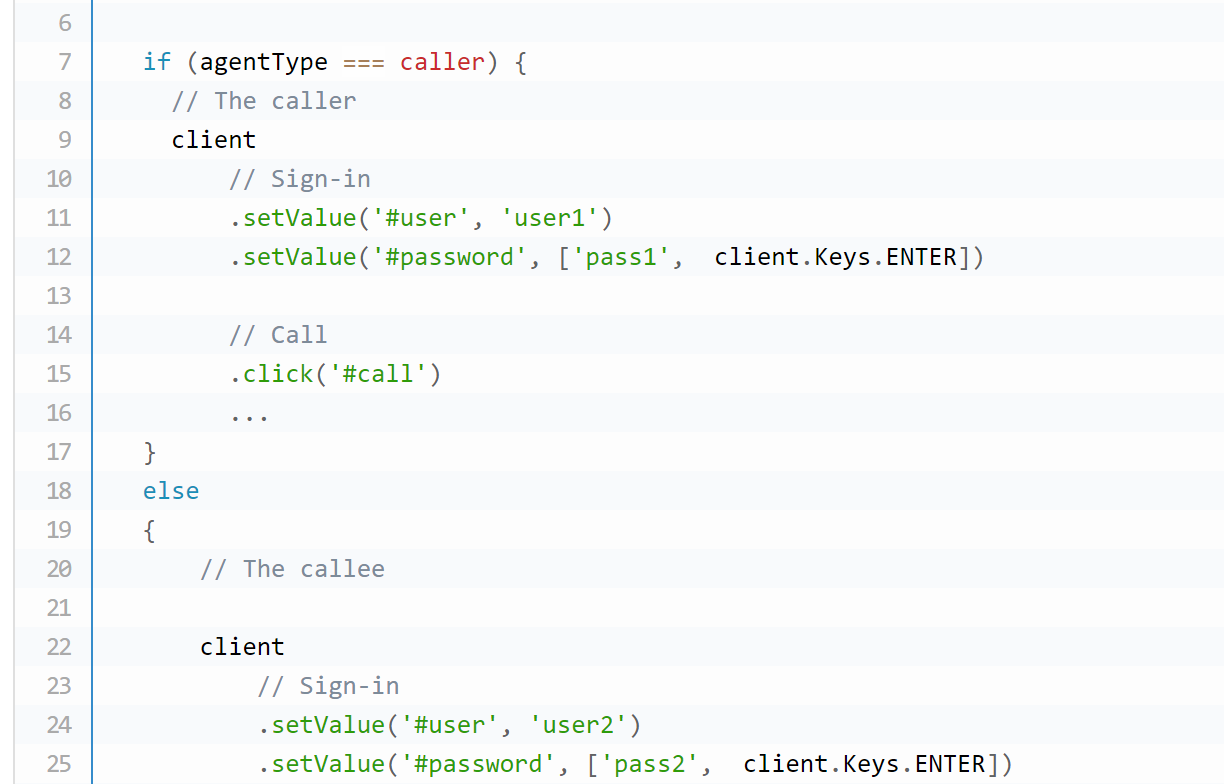

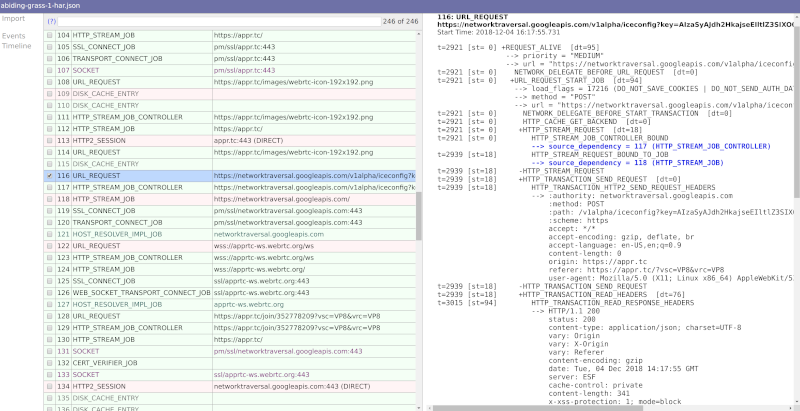

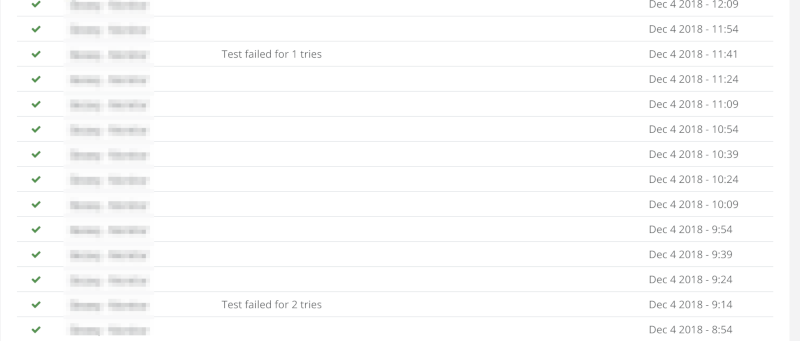

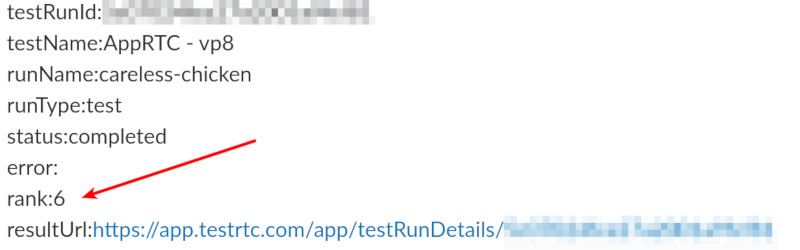

They decided to create a monitor with us that runs at a low frequency doing the exact same thing: two people logging in, one calls and the other answers. Checking that there’s audio and video and all is well.

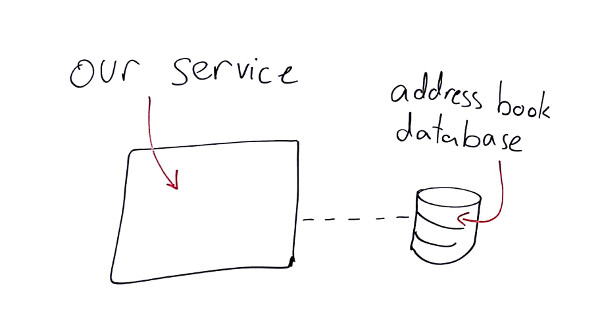

One time they contacted us complaining that our monitor was failing while they knew their system was up and running. So we opened up a failed monitor run, looked at the screenshot we collect automatically upon failure and saw an error on the screen – the browser just couldn’t get the address book of the user after logging in.

This had nothing to do with WebRTC. It was a faulty connection to the database, but it ended up killing the service. They got that pinpointed and resolved after a couple of iterations. For them, it was all about the end-to-end experience and making sure it works properly.
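To make the idea concrete, here is a minimal sketch of such a check as an ordered list of steps, where the run stops at the first step that fails and reports which one it was. This is not how testRTC implements its monitors. The step functions (`log_in`, `load_address_book`, `place_call`, `verify_media`) are hypothetical stand-ins for real browser automation, and `load_address_book` is hard-wired to fail so the example mirrors the story above.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class RunResult:
    passed: bool
    failed_step: Optional[str] = None  # name of the first step that failed
    detail: str = ""                   # error message from that step


def run_end_to_end_check(steps: List[Tuple[str, Callable[[], None]]]) -> RunResult:
    """Run the steps in order and stop at the first one that raises."""
    for name, step in steps:
        try:
            step()
        except Exception as exc:
            return RunResult(passed=False, failed_step=name, detail=str(exc))
    return RunResult(passed=True)


# Hypothetical steps. In a real monitor each one would drive a browser.
def log_in() -> None:
    pass


def load_address_book() -> None:
    # Simulates the faulty database connection from the story.
    raise RuntimeError("address book request failed")


def place_call() -> None:
    pass


def verify_media() -> None:
    pass


steps = [
    ("log in", log_in),
    ("load address book", load_address_book),
    ("place call", place_call),
    ("verify audio and video", verify_media),
]

result = run_end_to_end_check(steps)
print(result.passed, result.failed_step, result.detail)
```

Naming each step is what makes the failure actionable: the run does not just say "call failed", it says the session never got past the address book, long before any WebRTC code was involved.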

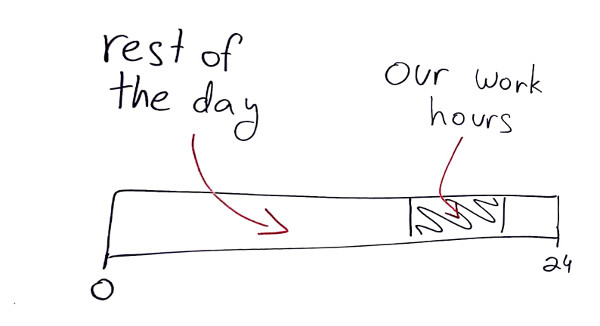

#4 – The Doctor Won’t See You Now

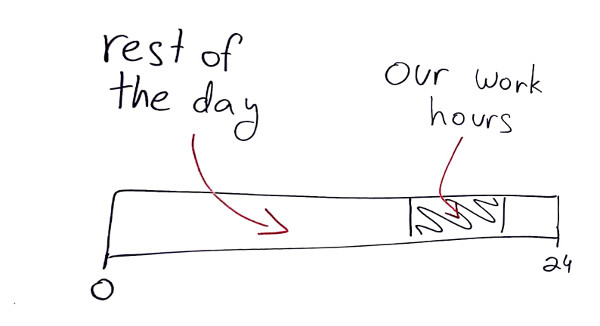

Healthcare is another interesting area for us. We’ve got customers in this space doing both testing and monitoring. The interesting thing about healthcare is that doctor visitations aren’t a 24/7 thing. For that particular customer it was a 3-hour daily shift.

The service was operating outside of the normal working hours of the doctor’s office, with the idea of offering patients a way to get a doctor during the evening hours.

With a service running only part of the day, the company wanted to be certain that the service was up and running properly – and to know about any problem early enough to resolve it before the doctors started their shift.
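One way to think about this is a monitoring window that opens some time before the shift and closes when the shift ends. The sketch below is only an illustration: the 18:00 to 21:00 shift and the 2-hour lead time are made-up values, and the function assumes the shift starts and ends on the same calendar day.

```python
from datetime import datetime, time, timedelta


def monitor_should_run(now: datetime, shift_start: time, shift_end: time,
                       lead: timedelta) -> bool:
    """Return True from `lead` before the shift starts until the shift ends.

    Assumes the shift does not cross midnight and `now` is a naive local time.
    """
    window_start = datetime.combine(now.date(), shift_start) - lead
    window_end = datetime.combine(now.date(), shift_end)
    return window_start <= now <= window_end


# Hypothetical evening shift with monitoring starting 2 hours ahead of it.
shift_start, shift_end = time(18, 0), time(21, 0)
lead = timedelta(hours=2)

before_window = datetime(2024, 1, 15, 15, 30)
inside_lead = datetime(2024, 1, 15, 16, 30)

print(monitor_should_run(before_window, shift_start, shift_end, lead))
print(monitor_should_run(inside_lead, shift_start, shift_end, lead))
```

The lead time is the important knob here. It needs to be long enough for someone to notice an alert and fix the issue before the first patient shows up.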

End-to-End Monitoring to the Rescue

In all of these cases, the servers were up and running. The machines were humming along, but the service itself was broken. Why? Because application metrics tell a story, but not the whole story. For that, you need end-to-end monitoring. You need a way to run a real session through the system to validate that all of its pieces – all of its moving parts – are working well TOGETHER.

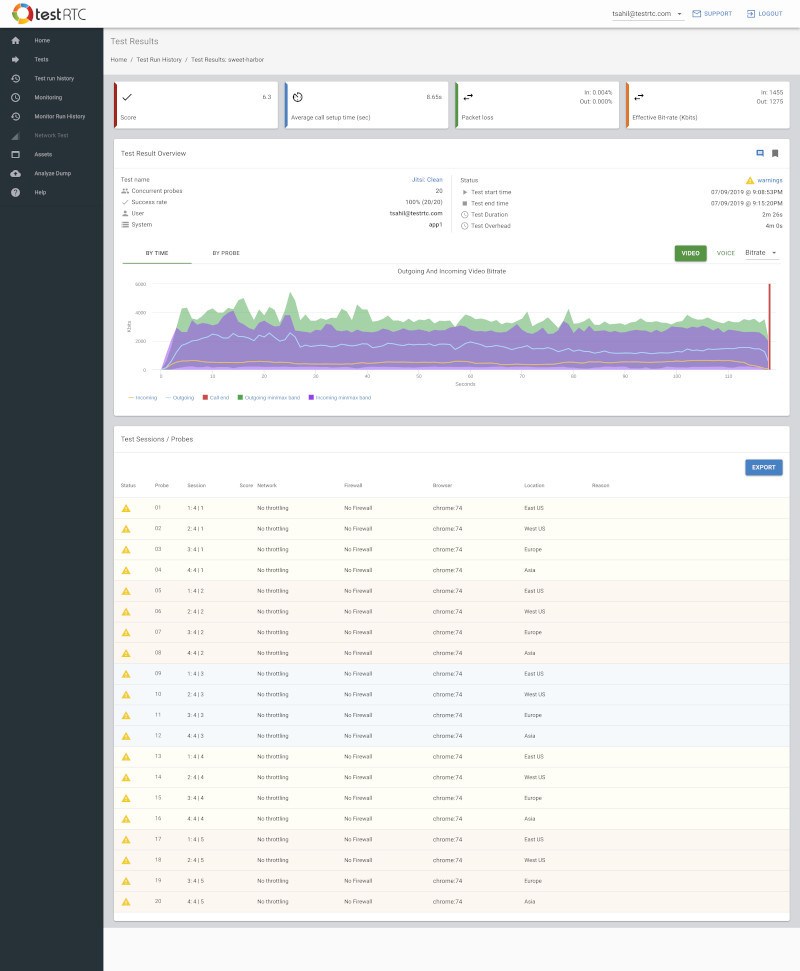

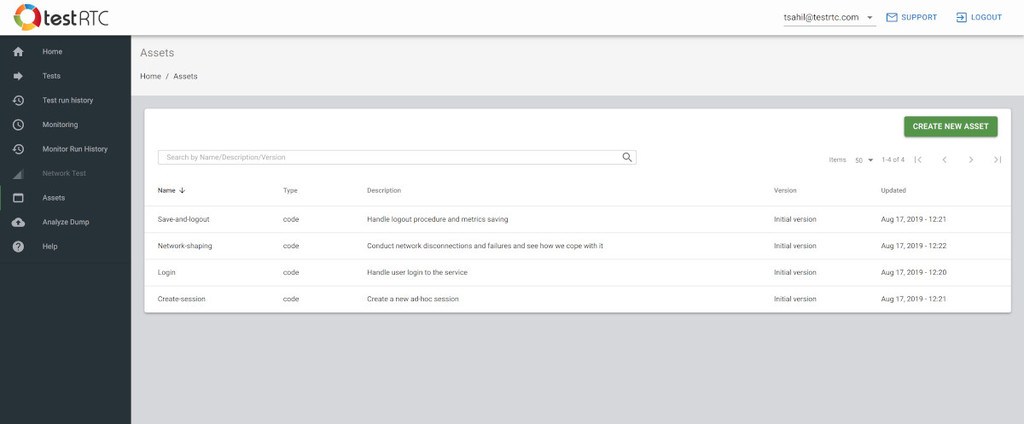

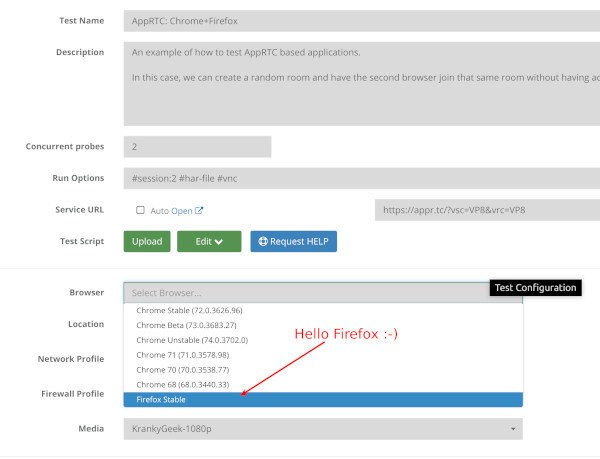

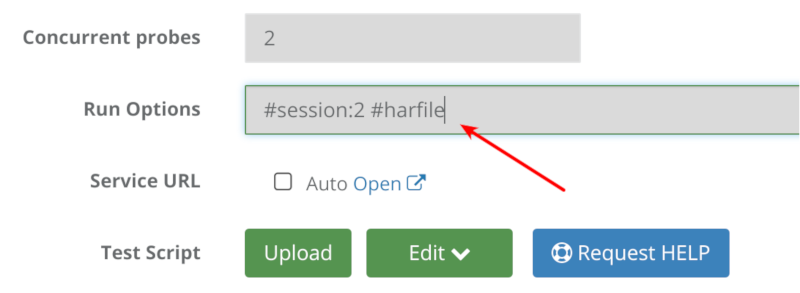

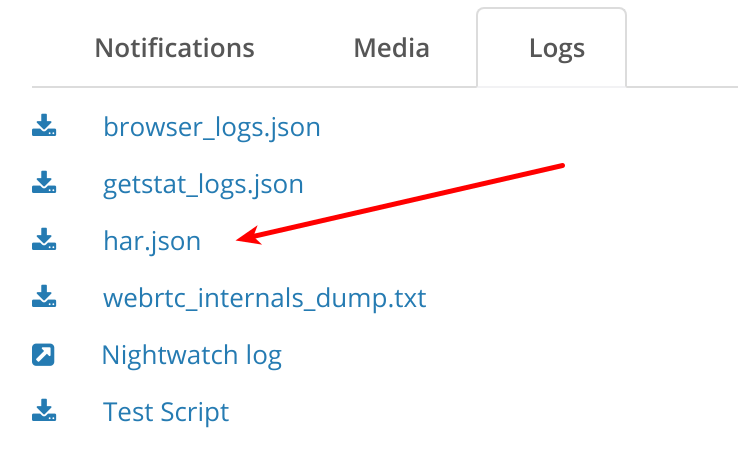

Next week, we will be hosting a webinar. In this webinar, we will show step by step how you can create a killer monitor for your own WebRTC application.

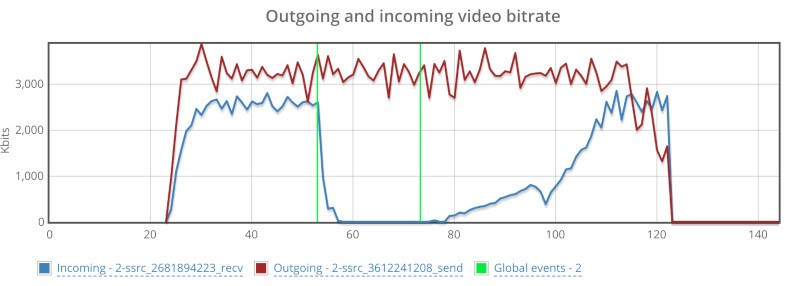

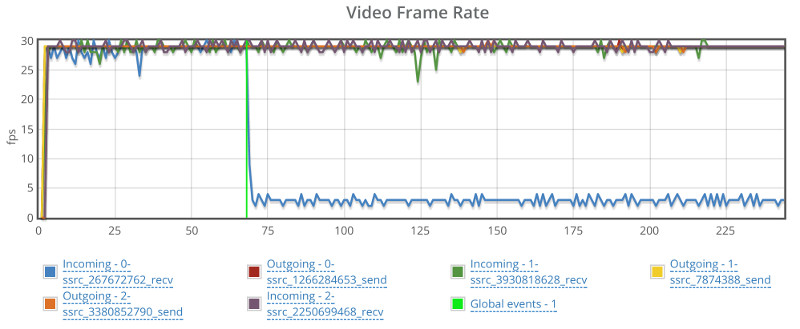

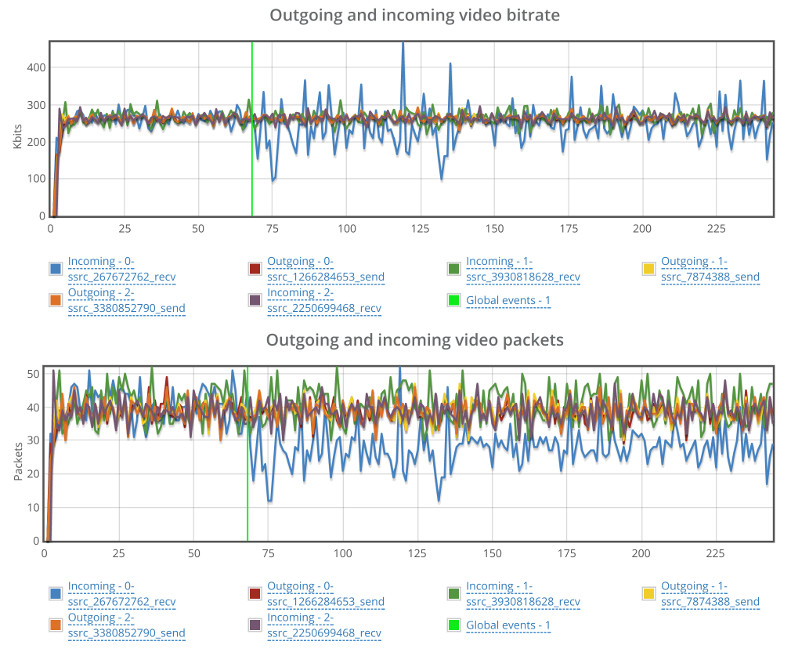

Oh – and we won’t only focus on working/not working type of scenarios. We will show you how to catch quality degradation issues of your service.
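As a rough illustration of what a quality check can look like, the sketch below compares a few call metrics against thresholds and returns a list of warnings. The metric names, the `CallStats` and `Thresholds` types and the threshold values are all assumptions made up for this example. They are not testRTC defaults, and the right limits depend on your service.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CallStats:
    packet_loss_pct: float
    jitter_ms: float
    round_trip_ms: float
    video_bitrate_kbps: float


@dataclass
class Thresholds:
    # Illustrative limits only; tune them for your own service.
    max_packet_loss_pct: float = 2.0
    max_jitter_ms: float = 30.0
    max_round_trip_ms: float = 300.0
    min_video_bitrate_kbps: float = 500.0


def quality_warnings(stats: CallStats, limits: Thresholds) -> List[str]:
    """Return one warning per metric that is outside its limit."""
    warnings = []
    if stats.packet_loss_pct > limits.max_packet_loss_pct:
        warnings.append(f"packet loss {stats.packet_loss_pct}% is above {limits.max_packet_loss_pct}%")
    if stats.jitter_ms > limits.max_jitter_ms:
        warnings.append(f"jitter {stats.jitter_ms} ms is above {limits.max_jitter_ms} ms")
    if stats.round_trip_ms > limits.max_round_trip_ms:
        warnings.append(f"round trip {stats.round_trip_ms} ms is above {limits.max_round_trip_ms} ms")
    if stats.video_bitrate_kbps < limits.min_video_bitrate_kbps:
        warnings.append(f"video bitrate {stats.video_bitrate_kbps} kbps is below {limits.min_video_bitrate_kbps} kbps")
    return warnings


limits = Thresholds()
healthy = CallStats(packet_loss_pct=0.3, jitter_ms=8.0, round_trip_ms=90.0, video_bitrate_kbps=1200.0)
degraded = CallStats(packet_loss_pct=4.5, jitter_ms=12.0, round_trip_ms=180.0, video_bitrate_kbps=220.0)

print(quality_warnings(healthy, limits))
for warning in quality_warnings(degraded, limits):
    print(warning)
```

In the degraded sample the call still connects, so a working/not working check would pass. It is the thresholds that flag the run.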

I’ll be doing it live, giving some tips and spending time explaining how our customers use our WebRTC monitoring service today – what types of problems they are solving with it.

Join me:

Creating a Kickass WebRTC Monitor Using testRTC

The recording can be found here.