How Blitzz shifted to self-service WebRTC network testing with testRTC

The Blitzz Remote Support Software is a flexible, scalable, and affordable solution for SMBs, mid-market, and well-established enterprises. Blitzz is helping service teams safely and successfully transition to a remote environment. The three-step solution to powerful visual assistance requires no app download. Customer Care Agents can clearly see what’s happening and offer remote guidance to quickly resolve issues without having to travel to the customer.

Keyur Patel, CTO and Co-founder of Blitzz, describes how qualityRTC supports blitzz.co:

qualityRTC helped us focus on what we do best, and that's providing an easy-to-use solution for remote video assistance over the browser, instead of having to worry about diagnosing different network issues. We really enjoy the direct support and quick communication the team at qualityRTC has given us in setting up and further developing our integration with them.

Here’s a better way to explain it:

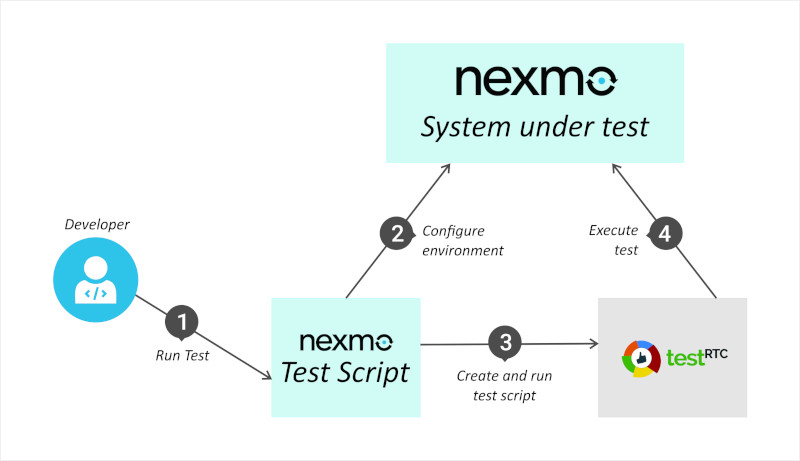

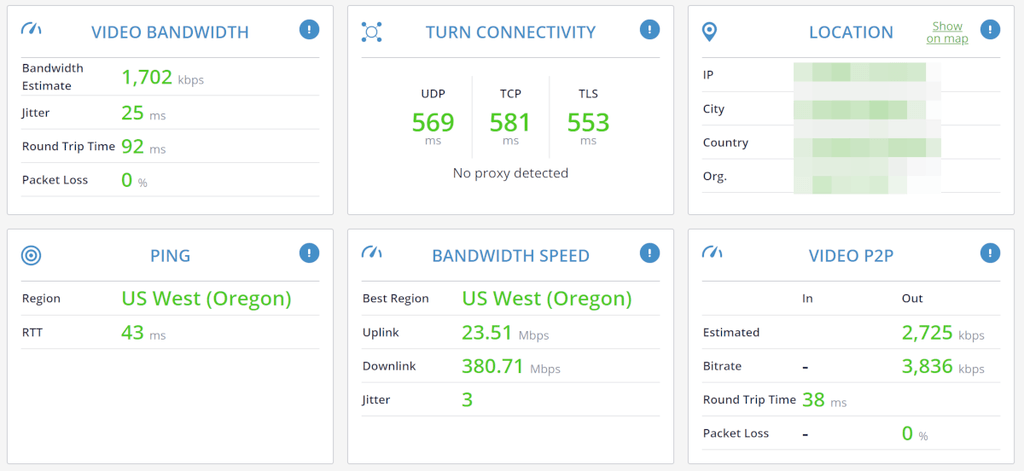

Blitzz selected testRTC's network testing product, qualityRTC. With it, Blitzz is able to quickly assist its clients when they encounter connectivity or quality issues with the service. We've been working closely with Blitzz in recent months to fit the measurements and tests to their needs. One of the things added as a result of this partnership was our Video P2P test widget. I thought it would be interesting to understand exactly what Blitzz is doing with testRTC, so I reached out to Keyur Patel, CTO and Co-founder of Blitzz.

Understanding networks and devices

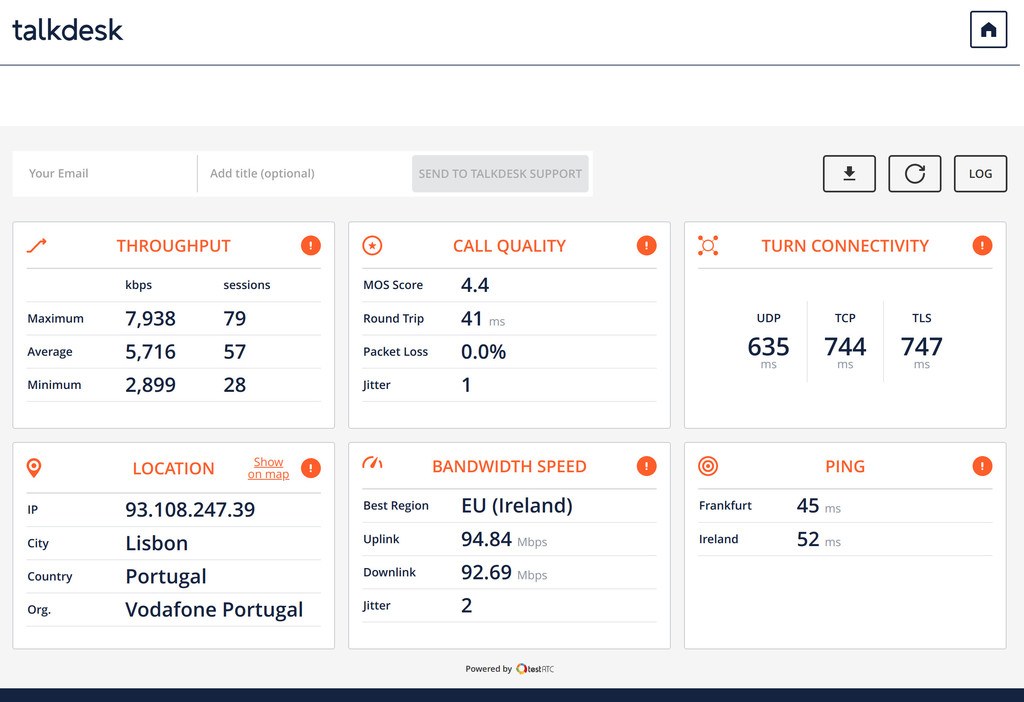

Blitzz aims to offer a simple experience. To that end, it makes use of WebRTC and the fact that it is available in the browser. This makes things easy for end users, since no installation is required: you direct them to a URL and it opens up in their browser. The challenge, though, is that with the proliferation of devices out there, you don't control which exact browser and device each user has.

On the customer’s side, the agents are almost always operating from inside secure and restricted networks. They also have limited bandwidth available to them. When deploying the service to a new customer, this question comes up time and time again:

Can the agents connect to the Blitzz infrastructure?

Are the required ports opened on the firewall by the IT team? Do they have enough bandwidth allocated to them?

Finding suitable solutions

Solving connectivity issues is an ongoing effort. To that end, Blitzz was using a combination of analysis tools freely available on the Internet. These included test.webrtc.org, speed testing services, and the network diagnosis tool offered by the CPaaS provider they were using.

This worked, but it was not very efficient. The process would take a couple of meetings, going back and forth to collect all of the information, troubleshoot, and retry until everything was set up right.

Asking customers to go through 3 different URLs just to validate that they had full connectivity wasn't the best experience either.

Using qualityRTC

Keyur was aware of testRTC and knew about qualityRTC. Once he tried the tool, he saw the potential of using it at Blitzz.

After a quick integration process, Blitzz was able to troubleshoot customer issues with ease. This enabled them to provide a sophisticated service instead of gluing together multiple alternatives.

qualityRTC shone once the pandemic hit and agents started working from home. Now the agents were running on very different networks, each in their own environment. While it was fine to ask an IT person to run multiple tools when onboarding to the service, doing the same at scale was a much bigger challenge.

By using qualityRTC, Blitzz was able to direct its customer base to a single tool. This allowed the agents to run speed tests and connectivity tests quickly and efficiently, especially at times when the quality of internet services was fluctuating.

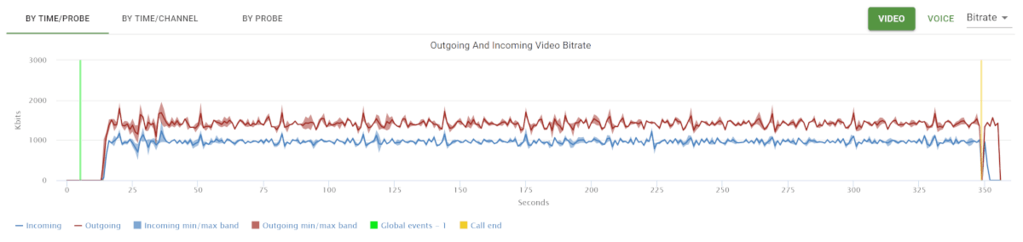

Streamlining the process

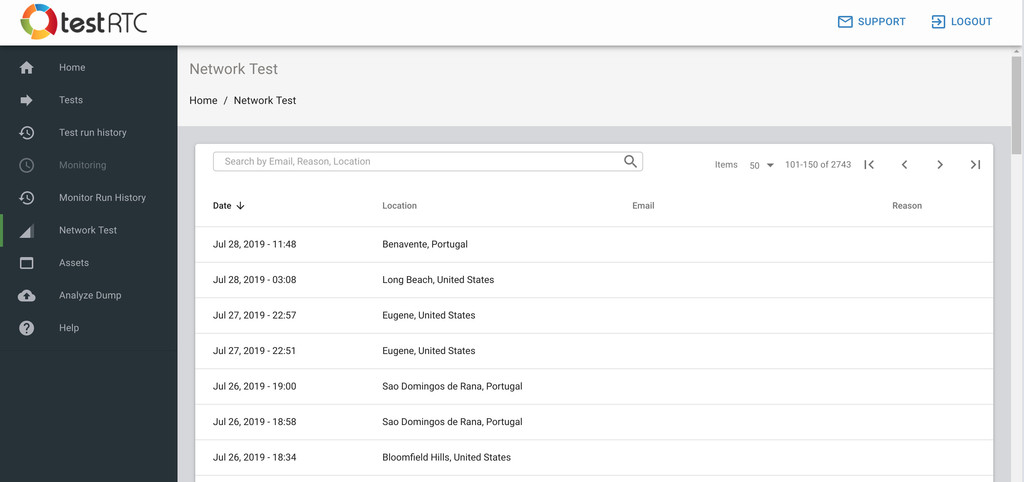

Blitzz has embedded qualityRTC in its application, allowing most of its users to diagnose connectivity issues during a video session. End users can self-test and diagnose issues by looking at the results on their own. If they still need to reach Blitzz Support, the support team can quickly review the log data that qualityRTC collected during their network test.

qualityRTC helped Blitzz increase customer satisfaction and reduce friction when onboarding over several thousand customer care agents in a matter of days. It also reduced the number of support tickets, since end users had all the information needed to resolve connectivity issues through the qualityRTC test portal.

Today, qualityRTC is an integral part of the Blitzz solution. It enables Blitzz to offer better customer service and a better customer experience while keeping support costs low.