Console logs in watchRTC

The watchRTC SDK can also collect browser console logs. While these can be verbose, they are useful for tracing and resolving application-related issues.

Whether you can collect console logs in watchRTC depends on your plan.

Configuring console logs collection

Browser console logs can be quite verbose. Collecting all of them can hurt your application's performance, because they take up bandwidth that is better used for the voice or video sessions you are conducting over WebRTC.

This is why the watchRTC SDK filters console log messages based on their level.

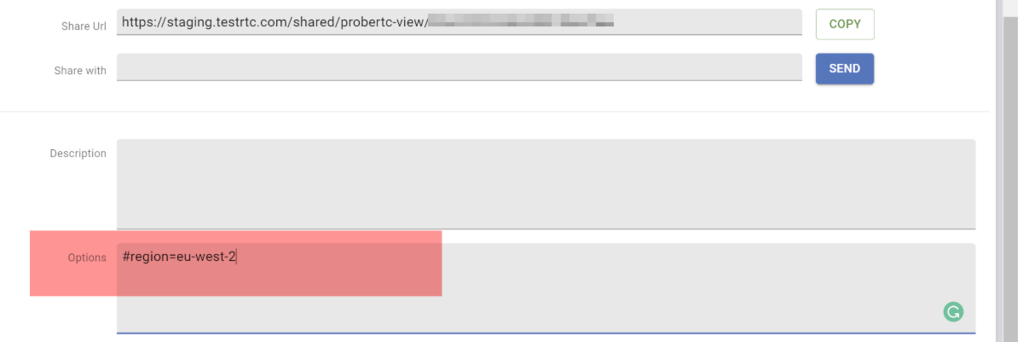

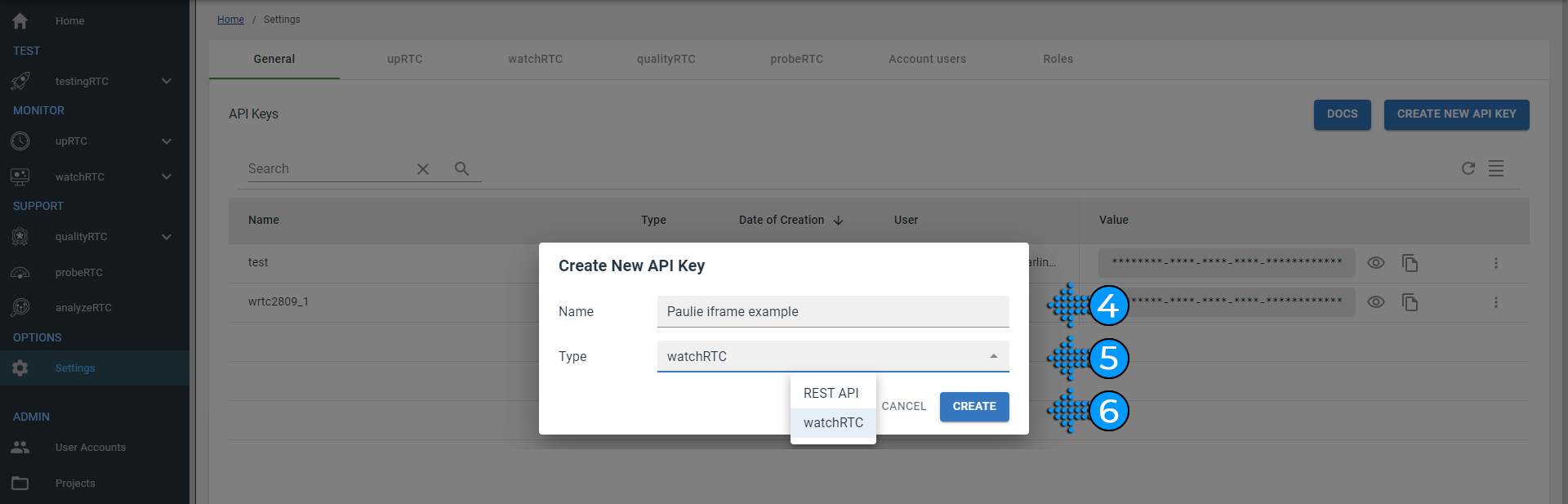

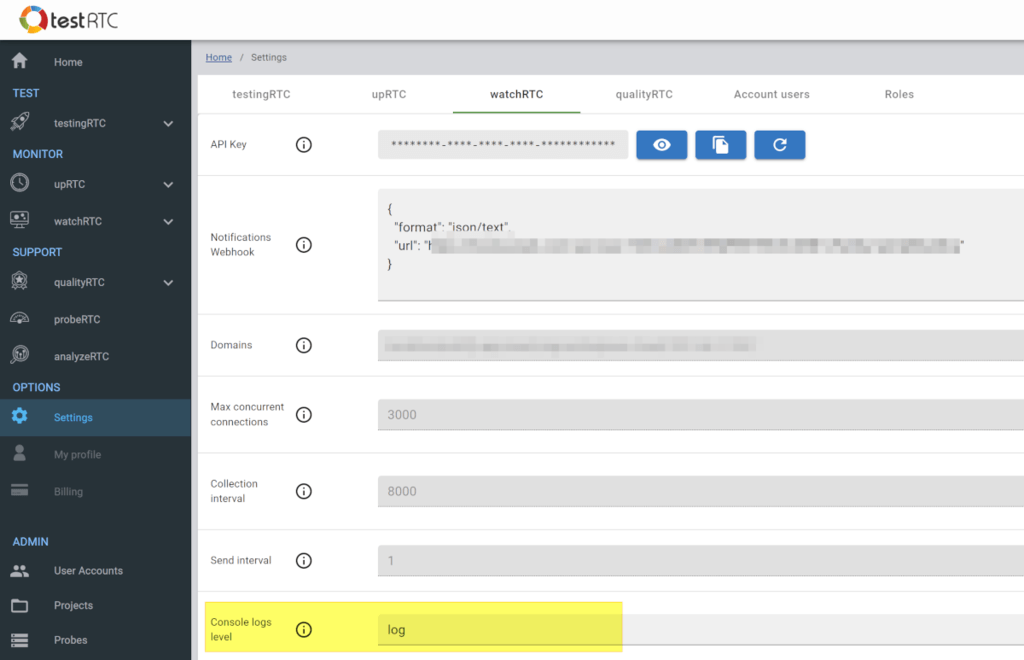

On Settings | watchRTC you can set Console logs level to “log”, “debug”, “info”, “warn” or “error”. We suggest keeping it at “warn” or “error” at all times.

Notes:

- In the example above, we chose to collect “log” level messages because we were chasing a bug and wanted to catch it in our staging environment before it reached the production system

- The configuration specified here will be used by default for collecting console logs in the watchRTC SDK. You can override this for individual peers if needed

- If this field is disabled in your account, your watchRTC plan does not include console logs collection
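To make the idea of level-based filtering concrete, here is a minimal sketch. It is not the SDK's implementation. It assumes the levels are ordered from most to least verbose in the same order the settings page lists them, and that a message is collected when its level is at or above the configured one. `shouldCollect` and the sample messages are hypothetical names used only for illustration.

```javascript
// Assumption: levels run from most verbose to least verbose, in the order
// the settings page lists them. The SDK's actual ordering may differ.
const LEVELS = ["log", "debug", "info", "warn", "error"];

// Hypothetical helper, not part of the watchRTC SDK.
// Returns true when a message at messageLevel passes a filter set to configuredLevel.
function shouldCollect(messageLevel, configuredLevel) {
  const messageRank = LEVELS.indexOf(messageLevel);
  const configuredRank = LEVELS.indexOf(configuredLevel);
  if (messageRank === -1 || configuredRank === -1) {
    return false; // unknown level: collect nothing
  }
  return messageRank >= configuredRank;
}

// Made-up sample messages for the illustration.
const messages = [
  { level: "debug", text: "ICE candidate gathered" },
  { level: "warn", text: "Microphone permission slow to resolve" },
  { level: "error", text: "Failed to set remote description" },
];

// With the filter at "warn", only the warn and error messages remain.
const collected = messages.filter((m) => shouldCollect(m.level, "warn"));
console.log(collected.map((m) => m.level));
```

Under these assumptions, moving the setting from “log” to “warn” drops the chattiest messages before they are sent, which is what keeps the bandwidth cost low.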

Granular control of console log collection in the SDK

At the watchRTC SDK level, you can override the default console log collection configuration for each peer, and you can change it dynamically during a session.

To do that, pass a console parameter to the watchRTC.init() or watchRTC.setConfig() API calls in the watchRTC SDK:

```javascript
watchRTC.setConfig({
  console: { level: "error", override: true }
});
```

The above example sets the log level to “error”, overriding the configuration of the account.
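One way to use the per-peer override is to choose the console configuration from the environment the peer runs in. The sketch below is only an illustration: `consoleConfigFor` and the environment names are hypothetical, and the call to the SDK is left as a comment so the snippet runs without the SDK loaded.

```javascript
// Hypothetical helper, not part of the watchRTC SDK: picks a console
// configuration for this peer based on the deployment environment.
function consoleConfigFor(environment) {
  if (environment === "staging") {
    // Verbose collection while chasing a bug before it reaches production.
    return { level: "log", override: true };
  }
  // Everywhere else, keep to the low-volume level.
  return { level: "error", override: true };
}

// Same shape as the object passed to setConfig in the example above.
const config = { console: consoleConfigFor("staging") };
console.log(config);

// In an application that has loaded the SDK, this object would be passed on:
// watchRTC.setConfig(config);
```

Keeping the decision in one small function makes it easy to raise verbosity for a single peer during an investigation and drop back to “error” afterwards.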

Viewing console logs

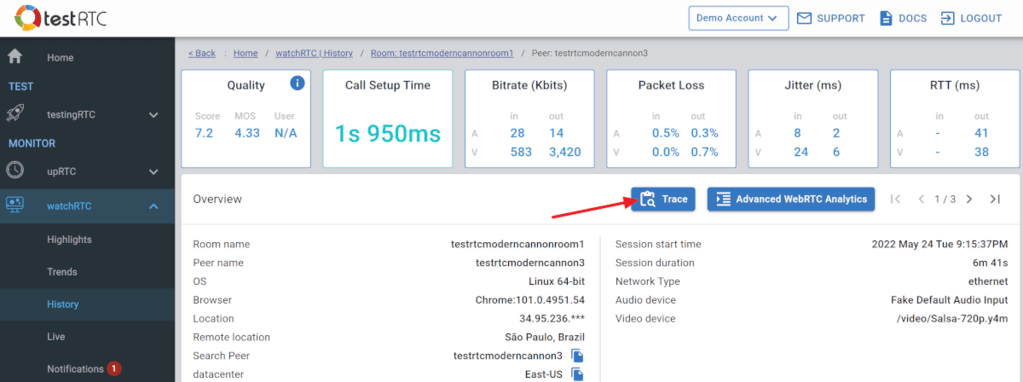

Console logs collected can be viewed as part of the Trace window of the peer level page: