How to measure re-ramp-up time for WebRTC video calls

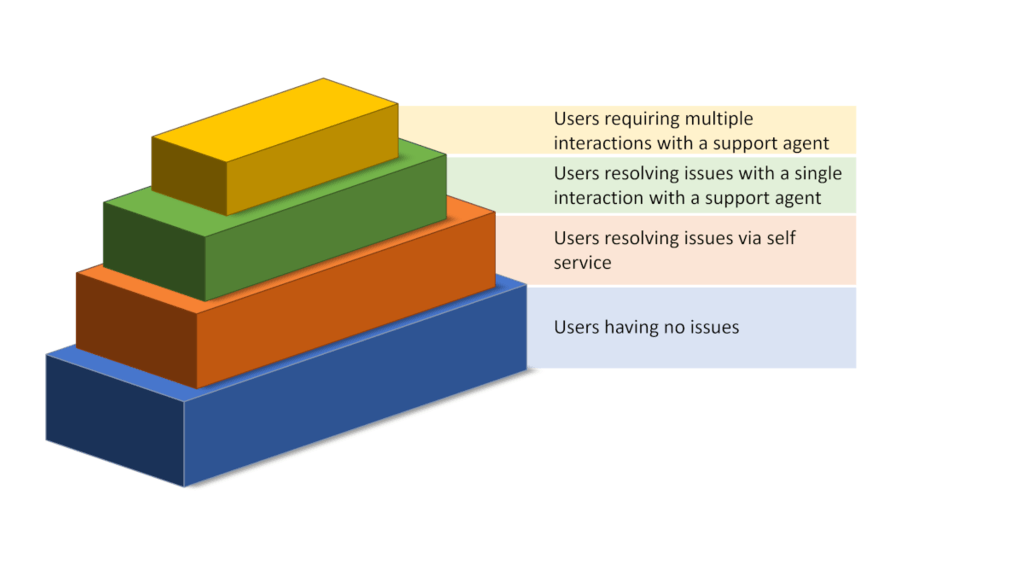

An important aspect of performance measurement is checking how long it takes your media server to ramp back up to a high bitrate after experiencing poor network conditions.

Re-ramp-up time

We’d like to show you how to simulate poor network conditions and measure the re-ramp-up time with testRTC.

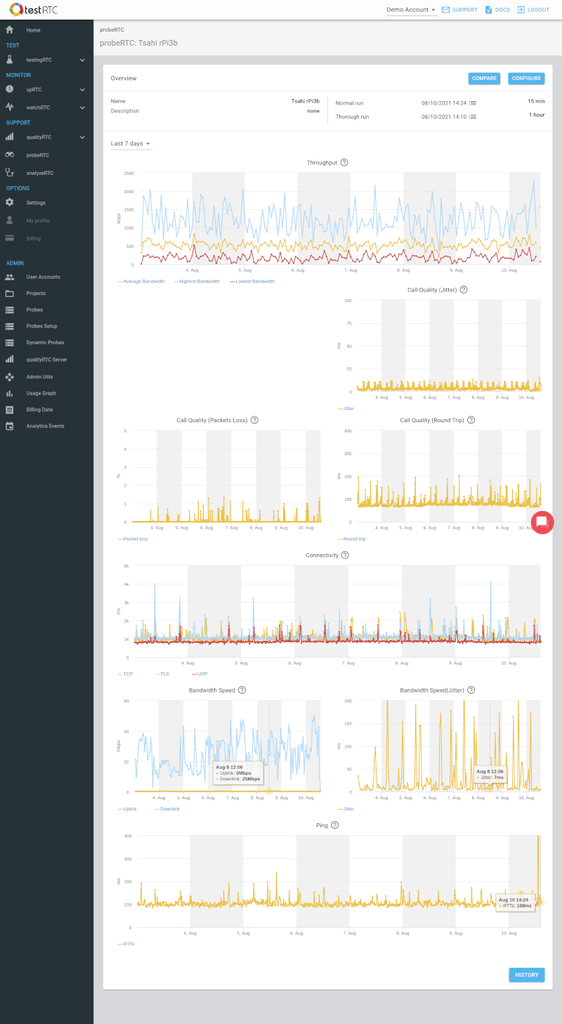

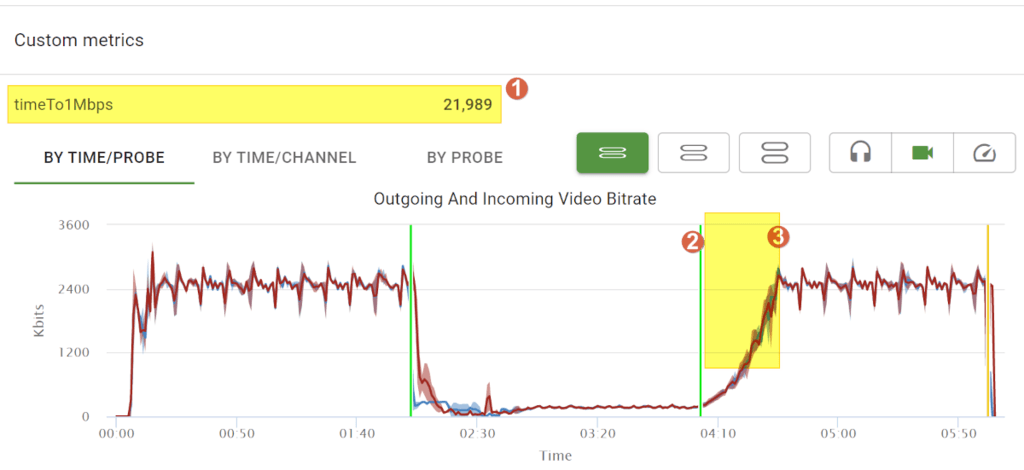

Here is what the result looks like:

We’ve added numbers to the image to highlight the relevant parts:

- A custom metric, timeTo1Mbps, that holds the re-ramp-up time. In the example above, it took 22 seconds

- An event that marks the start time of the measurement. In our case, this is when we “unthrottle” the network

- The time that passes until the bitrate goes up again. In our case, we are interested in the time it takes to reach 1 Mbps
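To make the metric concrete, here is a minimal JavaScript sketch of the idea behind a time-to-threshold value such as timeTo1Mbps: given bitrate samples and the time of the event that starts the measurement, return the number of seconds until the first sample above the threshold. This is an assumption about the general calculation, not testRTC’s implementation; the function name timeToThreshold, the { t, kbps } sample shape and the sample values are all hypothetical.

```javascript
// Hypothetical sketch of a "time to threshold" calculation.
// NOT testRTC's implementation; it only illustrates the idea behind timeTo1Mbps.

/**
 * @param {Array<{t: number, kbps: number}>} samples - bitrate samples, t in seconds
 * @param {number} eventTime - time (s) of the event that starts the measurement
 * @param {number} thresholdKbps - bitrate that counts as "ramped back up"
 * @returns {number|null} seconds from the event to the first sample above the
 *   threshold, or null if the threshold is never reached
 */
function timeToThreshold(samples, eventTime, thresholdKbps) {
  // Sort a copy by time so unordered input is handled without mutating it
  const sorted = [...samples].sort((a, b) => a.t - b.t);
  for (const s of sorted) {
    // Ignore anything recorded before the start event
    if (s.t >= eventTime && s.kbps > thresholdKbps) {
      return s.t - eventTime;
    }
  }
  return null;
}

// Usage with made-up samples; the network is unthrottled at t = 240 s
const samples = [
  { t: 238, kbps: 290 },
  { t: 240, kbps: 300 },
  { t: 250, kbps: 640 },
  { t: 262, kbps: 1050 },
  { t: 264, kbps: 1200 },
];
console.log(timeToThreshold(samples, 240, 1000)); // 22
```

Returning null rather than a large number keeps “never recovered during the test” distinct from “recovered slowly”.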

This is an important measurement to understand how your media server behaves in cases of poor network conditions – these conditions are often dynamic in nature and we’d like to make sure the media server adapts to them nicely.

The above screenshot (and the sample used here) was taken using AppRTC, Google’s peer-to-peer sample. You can see that the initial ramp-up takes less than 5 seconds, while the re-ramp-up takes over 20 seconds. Expect both values to be considerably worse for most media servers.

You will find the full test script used at the bottom of this article.

Explaining what we are doing

Once a session is connected, we are going to do the following:

- Call .rtcSetMetricFromThresholdTime() to let testRTC know we want to measure the re-ramp-up time. We pass it the name of the event that starts the measurement (“Good”, marked later in the script) and the expectation “video.out.bitrate > 1000”

- Wait 2 minutes for the connection and bitrate to stabilize

- Introduce poor network conditions using .rtcSetNetwork(). In this test script, I arbitrarily chose to limit the bitrate to 300 kbps in both directions

- Wait for 2 minutes under the poor conditions

- Unthrottle the network by calling .rtcSetNetworkProfile() with an empty profile, and mark the time using .rtcEvent()

- Wait for 2 more minutes to give the connection time to re-ramp up and stabilize
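The timing of these phases matters, because the re-ramp-up can only be observed inside the final window. The sketch below writes the plan as data and computes when each phase starts and ends, relative to the start of the first wait. The phases array and the schedule() helper are hypothetical illustrations, not part of the testRTC API.

```javascript
// Hypothetical description of the test plan; durations mirror the steps above.
const phases = [
  { name: "stabilize", durationSec: 2 * 60, bitrateCapKbps: null },
  { name: "throttled", durationSec: 2 * 60, bitrateCapKbps: 300 },
  { name: "recovery", durationSec: 2 * 60, bitrateCapKbps: null },
];

// Compute start and end offsets (in seconds) for each consecutive phase
function schedule(plan) {
  let start = 0;
  return plan.map((p) => {
    const entry = { ...p, startSec: start, endSec: start + p.durationSec };
    start += p.durationSec;
    return entry;
  });
}

for (const p of schedule(phases)) {
  const cap = p.bitrateCapKbps === null ? "no cap" : `${p.bitrateCapKbps} kbps cap`;
  console.log(`${p.name}: ${p.startSec}s to ${p.endSec}s (${cap})`);
}
// stabilize: 0s to 120s (no cap)
// throttled: 120s to 240s (300 kbps cap)
// recovery: 240s to 360s (no cap)
```

In this plan the “Good” event lands 240 seconds in, leaving a 120-second recovery window. A re-ramp-up slower than that window cannot be captured, so lengthen the last phase when testing a media server that recovers slowly.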

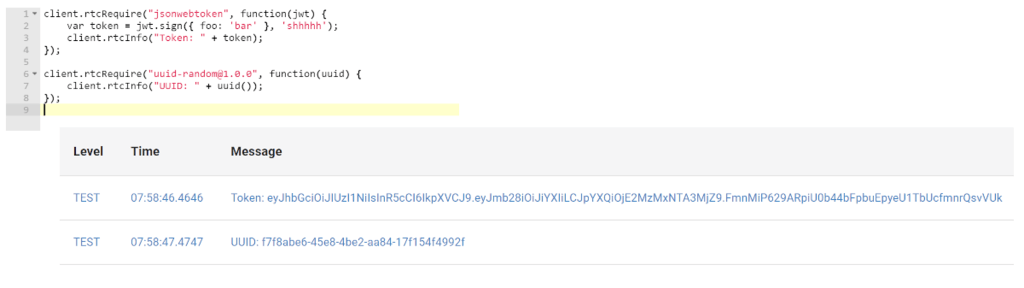

Here are the main pieces of code from the full script:

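```javascript
client
  // Measure the time from the 'Good' event until outgoing video exceeds 1000 kbps
  .rtcSetMetricFromThresholdTime("timeTo1Mbps", "Good", "video.out.bitrate > 1000", "sum")

  // **** ACTUAL VIDEO CALL ****
  // Let the connection and bitrate stabilize
  .pause(2*60*sec)

  // Throttle the network to 300 kbps in both directions
  .rtcSetNetwork({
    outgoing: { packetloss: 0, jitter: 0, latency: 0, bitrate: 300 },
    incoming: { packetloss: 0, jitter: 0, latency: 0, bitrate: 300 }
  })
  .rtcEvent('Bad', 'global')
  .pause(2*60*sec)

  // Remove the network restrictions and mark the start of the re-ramp-up measurement
  .rtcSetNetworkProfile("")
  .rtcEvent('Good', 'global')
  .rtcScreenshot("in call")

  // Give the connection time to re-ramp up and stabilize
  .pause(2*60*sec);
```

A few notes here: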

- Notice that the above is implemented for only one of the probes in the session, not all of them. This allows us to observe how the other probes are affected and what the media server does in such cases

- You can place the measurement on the receiving end instead of the sender by changing the condition to “video.in.bitrate > 1000”

- The actual bitrate we want to reach is arbitrary here and depends on the use case

- We could also use it to measure the initial ramp-up time at the beginning of the session, not only the re-ramp-up after bad network conditions
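Switching between “video.out.bitrate > 1000” and “video.in.bitrate > 1000” changes only the stats path inside the condition. As a rough illustration of how such a condition string breaks down into a path, an operator and a threshold, here is a hypothetical evaluator. The stats snapshot shape and the set of supported operators are assumptions made for this sketch; testRTC’s actual parser and stats layout may differ.

```javascript
// Hypothetical evaluator for conditions like "video.out.bitrate > 1000".
// The stats shape and operator set are assumptions, not testRTC's format.
const OPS = {
  ">": (a, b) => a > b,
  ">=": (a, b) => a >= b,
  "<": (a, b) => a < b,
  "<=": (a, b) => a <= b,
  "==": (a, b) => a === b,
};

function evaluateCondition(condition, stats) {
  // Expect "<dotted.path> <operator> <number>"
  const match = condition
    .trim()
    .match(/^([\w.]+)\s*(>=|<=|==|>|<)\s*(-?\d+(?:\.\d+)?)$/);
  if (!match) throw new Error(`Unsupported condition: ${condition}`);
  const [, path, op, rawValue] = match;

  // Walk the dotted path, e.g. video -> out -> bitrate
  const actual = path
    .split(".")
    .reduce((obj, key) => (obj == null ? undefined : obj[key]), stats);

  // A missing or non-numeric value never satisfies the condition
  if (typeof actual !== "number") return false;
  return OPS[op](actual, Number(rawValue));
}

// Made-up snapshot of one probe's bitrates, in kbps
const snapshot = { video: { out: { bitrate: 1200 }, in: { bitrate: 850 } } };
console.log(evaluateCondition("video.out.bitrate > 1000", snapshot)); // true
console.log(evaluateCondition("video.in.bitrate > 1000", snapshot)); // false
```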

Test Script Used

We’ve set the service URL to https://appr.tc and used the following script with 2 probes and a session size of 2:

```javascript
/*
This example measures recuperation time after poor network conditions

SCENARIO
* Browser 1 connects a session
* Browser 2 joins the room
* Browser 1 waits for 2 minutes
* Browser 1 limits its network to 300 kbps for 2 minutes
* Browser 1 removes network restrictions for 2 more minutes
* Browser 1 measures the time for recuperation
*/

// Variables that we will use in this example
var probeType = Number(process.env.RTC_IN_SESSION_ID);
var sec = 1000;

if (probeType === 1) {
  // Browser 1 actions take place here
  // Open initial AppRTC URL and join a randomly allocated room
  client
    .url(process.env.RTC_SERVICE_URL)
    .waitForElementVisible('body', 10*sec)
    .pause(1*sec)
    .click('#join-button')
    .waitForElementVisible('#videos', 10*sec)
    .pause(2*sec)
    .rtcScreenshot("Alone in call")

    // Send the room URL to the second browser
    .url(function (result) {
      this.assert.equal(typeof result, "object");
      this.assert.equal(result.status, 0);
      var roomUrl = result.value;
      this.assert.equal(!!roomUrl, true);

      client
        .rtcInfo('Sending Room url %s', roomUrl)
        .rtcProgress("Waiting @ " + roomUrl)
        .rtcSetSessionValue("roomUrl", roomUrl);
    })

    // Measure the time from the 'Good' event until outgoing video exceeds 1000 kbps
    .rtcSetMetricFromThresholdTime("timeTo1Mbps", "Good", "video.out.bitrate > 1000", "sum")

    // **** ACTUAL VIDEO CALL ****
    .pause(2*60*sec)

    // Throttle the network to 300 kbps in both directions
    .rtcSetNetwork({
      outgoing: { packetloss: 0, jitter: 0, latency: 0, bitrate: 300 },
      incoming: { packetloss: 0, jitter: 0, latency: 0, bitrate: 300 }
    })
    .rtcEvent('Bad', 'global')
    .pause(2*60*sec)

    // Remove the network restrictions and start the re-ramp-up measurement
    .rtcSetNetworkProfile("")
    .rtcEvent('Good', 'global')
    .rtcScreenshot("in call")
    .pause(2*60*sec);
} else {
  // Browser 2 actions take place here
  // Wait for Browser 1 to send us the URL for this call
  client
    .rtcWaitForSessionValue('roomUrl', function (urlToJoin) {
      client
        .rtcInfo('Joining %s', urlToJoin)
        .rtcProgress("Joining " + urlToJoin)
        .url(urlToJoin)
        .waitForElementVisible('body', 30*sec)
        .pause(2*sec)
        .rtcScreenshot("Joining")
        .click('#confirm-join-button')
        .waitForElementVisible('#videos', 10*sec);
    }, 30*sec)

    // **** ACTUAL VIDEO CALL ****
    .pause(3*60*sec)
    .rtcScreenshot("in call")
    .pause(3*60*sec);
}

client
  .rtcProgress("Bye");
```
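The two probes coordinate through session values: Browser 1 publishes the room URL with .rtcSetSessionValue(), and Browser 2 waits in .rtcWaitForSessionValue(), which the script calls with a 30-second timeout argument. The following in-process JavaScript sketch mimics that set-and-wait pattern with Promises, showing a value handed over whether it is set before or after the other side starts waiting, and where a timeout applies. The SessionValues class is hypothetical; testRTC coordinates real browsers on separate machines, and this is not its implementation.

```javascript
// Hypothetical in-process sketch of a set-and-wait handoff between two parties.
// It only mimics the pattern of rtcSetSessionValue() / rtcWaitForSessionValue().
class SessionValues {
  constructor() {
    this.values = new Map(); // key -> value once published
    this.waiters = new Map(); // key -> callbacks waiting for that key
  }

  set(key, value) {
    this.values.set(key, value);
    // Wake up everyone already waiting on this key
    for (const notify of this.waiters.get(key) || []) notify(value);
    this.waiters.delete(key);
  }

  waitFor(key, timeoutMs) {
    // Value already published: resolve immediately
    if (this.values.has(key)) return Promise.resolve(this.values.get(key));
    return new Promise((resolve, reject) => {
      const timer = setTimeout(
        () => reject(new Error(`Timed out after ${timeoutMs} ms waiting for "${key}"`)),
        timeoutMs
      );
      const list = this.waiters.get(key) || [];
      list.push((value) => {
        clearTimeout(timer); // value arrived in time, cancel the timeout
        resolve(value);
      });
      this.waiters.set(key, list);
    });
  }
}

// Usage: "Browser 2" waits up to 30 s; "Browser 1" publishes a made-up room URL
const session = new SessionValues();
session
  .waitFor("roomUrl", 30 * 1000)
  .then((url) => console.log(`Joining ${url}`))
  .catch((err) => console.error(err.message));
session.set("roomUrl", "https://appr.tc/r/example-room");
```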