VoIP Network Tests in the era of WebRTC

Not sure what got into me last week, but I wanted to see what kind of network testing for VoIP exists out there. It took me down memory lane, to what felt like the wild west of the 90’s world wide web.

You can do that yourself! Just search for “voip network test” on Google and check what the tests look like. They come in exactly two shapes and sizes:

- A generic speed test

- Download a test app

Neither of these methods is good. They are either inaccurate or full of friction.

The ones hosting these network tests are UCaaS vendors, trying to entice customers to come their way. The idea is, you run a test, and they nicely ask you how many phone lines you’d like a quote for…

So what’s wrong with that?

1. Generic speed tests aren’t indicative of ability to conduct VoIP calls

Most of the solutions I’ve found out there were just generic speed tests: either an embedded network test page from a third party or, for those going to greater lengths, a self-hosted speed testing machine. Which is fine. But does it actually answer the question the user wants answered?

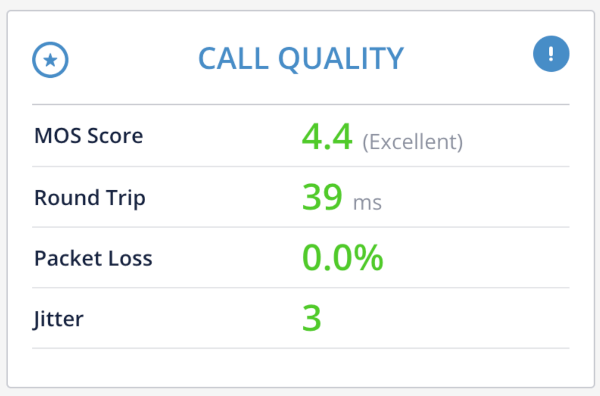

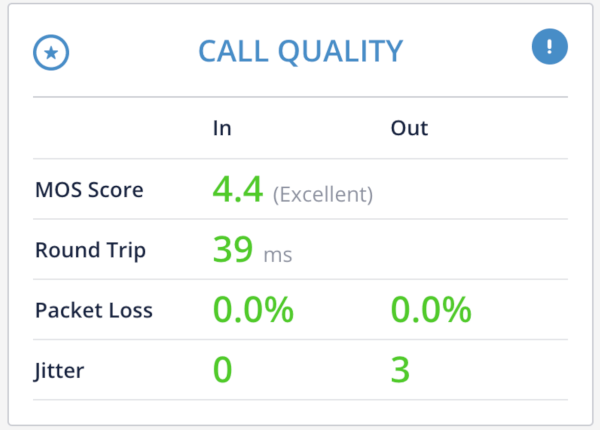

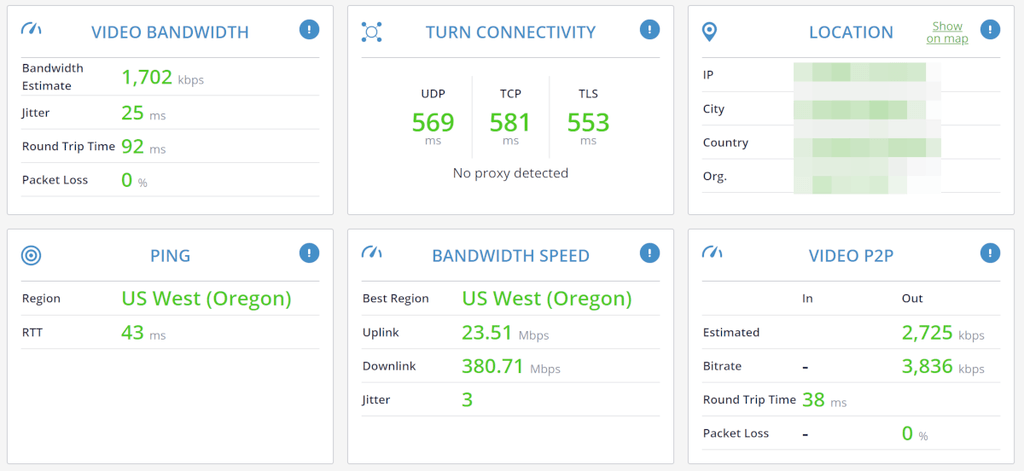

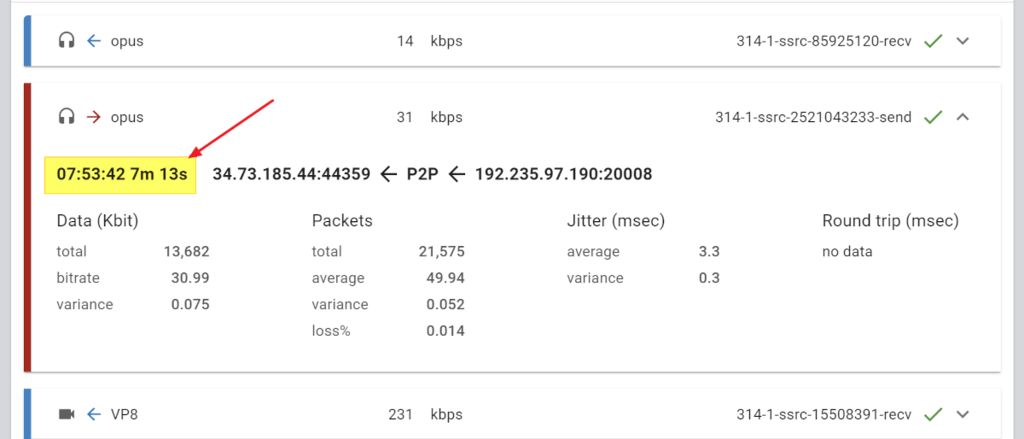

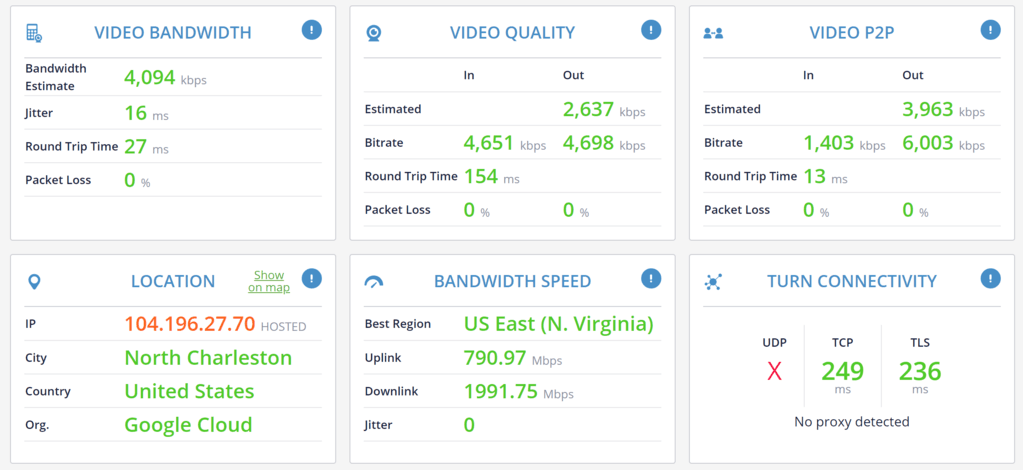

Here’s an interesting example where bandwidth speeds are GREAT but support for VoIP or WebRTC – not so much:

I used one of our Google Cloud machines to try this out. It passes the speed test beautifully. What does that say about the quality I’ll get with it for VoIP? Not much.

For that same device on the same network, I am getting blocked over UDP. VoIP is conducted over UDP to maintain low latency and to handle packet losses (which happen on any network at one point or another).

This isn’t limited to wholesale blocking of UDP traffic. Other factors, such as the use of a VPN, throttling of UDP, added latency, or access to the media devices, all affect the user’s experience and in many cases his ability to use your VoIP service.
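One rough way to spot the UDP problem from inside a browser is to look at the ICE candidates that WebRTC gathers. The sketch below is a minimal heuristic, not a full connectivity test: it parses candidate lines (which follow the standard `candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type>` layout) and reports whether any server-reflexive candidate was gathered over UDP, which suggests a STUN server answered over UDP. The helper names `parseCandidate` and `hasUdpServerReflexive` are made up for this illustration.

```typescript
// Hypothetical helpers for illustration; only the candidate-line layout is standard.
type Transport = "udp" | "tcp";
type CandidateType = "host" | "srflx" | "prflx" | "relay";

interface ParsedCandidate {
  transport: Transport;
  type: CandidateType;
}

function isTransport(value: string): value is Transport {
  return value === "udp" || value === "tcp";
}

function isCandidateType(value: string): value is CandidateType {
  return value === "host" || value === "srflx" || value === "prflx" || value === "relay";
}

// Parse one ICE candidate line; returns null when the line does not match the layout.
function parseCandidate(line: string): ParsedCandidate | null {
  const parts = line.trim().replace(/^a=/, "").split(/\s+/);
  if (parts.length < 8 || !parts[0].startsWith("candidate:") || parts[6] !== "typ") {
    return null;
  }
  const transport = parts[2].toLowerCase();
  const type = parts[7];
  if (!isTransport(transport) || !isCandidateType(type)) {
    return null;
  }
  return { transport, type };
}

// Heuristic: a UDP "srflx" candidate implies a STUN server replied over UDP.
// Relay candidates are ignored on purpose, since their transport field does not
// reveal how the client itself reached the relay server.
function hasUdpServerReflexive(lines: string[]): boolean {
  return lines
    .map(parseCandidate)
    .some((c) => c !== null && c.transport === "udp" && c.type === "srflx");
}
```

In a browser, the input lines would typically come from the `candidate` string of each candidate delivered by the `icecandidate` event of an `RTCPeerConnection` configured with a STUN server. A `false` result is a hint rather than proof: it is worth confirming against the actual media servers of the service in question.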

2. Downloading test apps is not what you expect to do in 2021

In some cases, speed test services ask you to download and install an application.

There’s added friction right there. What if the user doesn’t have permission to install applications on his device? What if he is running on Linux? What if the user isn’t technically savvy?

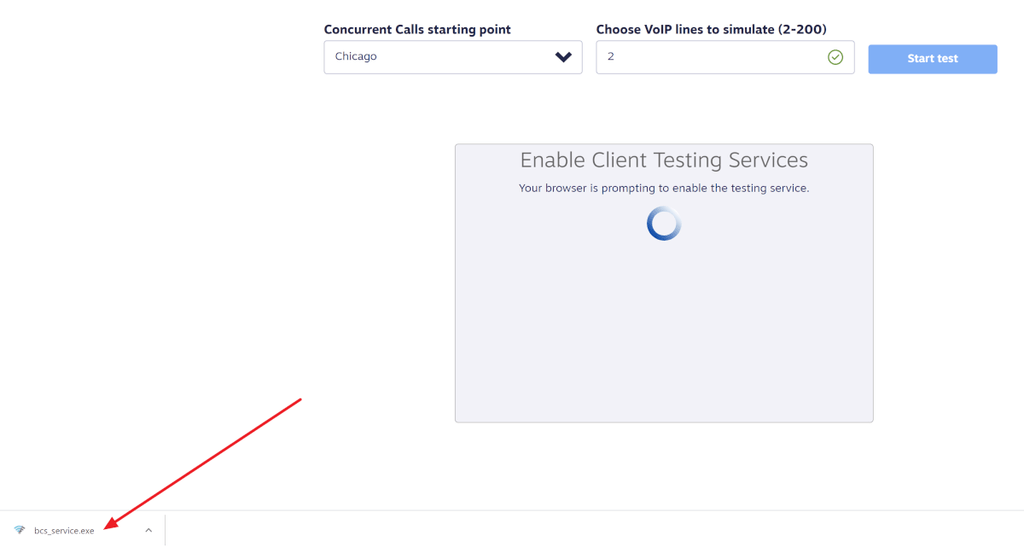

I tried out one of these so-called downloadable speed tests.

I clicked the “Start test” button. After some 10 seconds of waiting, it downloaded an executable to my machine. No further prompts or explanations given.

That brought up the Windows 10 installation screen, with a name different from that of the vendor whose site I was on.

Deciding to install, I clicked again, only to be prompted by another installation window.

Next clicks? EULA, Opt-in, Folder selection, Finish

So… I had to agree to an EULA, actively remove an opt-in, select the folder to install to (there was a default), be reminded that it is now running in the background (WHY? For what purpose?), and then click on Finish.

It got me results, but at what cost and at what friction level for the end user?

In this specific case, all of this came before I had even decided to use that service provider. And I had to:

- Click on 6 buttons to get there

- Sign a legal document (EULA)

- Opt out from something (so it won’t leave ghosts on my machine)

- Remember to go and delete what was downloaded

Then there’s the challenge of the multiple popups and screen focus changes that took place throughout the experience.

The results might be accurate and useful, but there are better ways.

What to look for in a VoIP network test?

There’s a dichotomy between the available solutions out there: they are either simple to use and grossly inaccurate, or they are accurate and complex to use.

Then there’s the fact that they answer only a single question: is there enough bandwidth? They say far less about other network aspects, like firewall and VPN configurations.

From our own discussions with clients and users, here’s what we’ve learned in the last two years about what VoIP network tests should look like:

- Simple to use

  - Simple for the end user to start the test

  - Simple for the support/IT person to see the results

  - Simple to read and understand the results

- Specific to your infrastructure

  - A generic test is great, but isn’t accurate

  - Whatever tests the network needs to test your infrastructure directly. If that’s impossible, then the best possible approximation of it

- Supports your workflow

  - Ability to collect the data you need about the user

  - Easily see the results on your end, to assist the client

  - Customizable to your business processes and use cases
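To make “simple to read” and “customizable” a bit more concrete, here is a minimal sketch of how raw test measurements could be folded into a single verdict with plain-language issues. Everything in it is an assumption made for illustration: the field names, the `summarize` function, the three-level verdict, and the decision to treat blocked UDP as a warning rather than a failure. The thresholds are passed in by the caller, since acceptable values depend on the service being tested.

```typescript
// Illustrative only: field names, verdict levels, and rules are assumptions,
// not the output format of any specific product.
interface NetworkTestResult {
  udpReachable: boolean;
  microphoneAccessible: boolean;
  roundTripMs: number;
  packetLossPercent: number;
}

// Limits are supplied per service, so the same test can fit different infrastructures.
interface Thresholds {
  maxRoundTripMs: number;
  maxPacketLossPercent: number;
}

type Verdict = "pass" | "warn" | "fail";

interface Summary {
  verdict: Verdict;
  issues: string[];
}

function summarize(result: NetworkTestResult, limits: Thresholds): Summary {
  const blocking: string[] = [];
  const degrading: string[] = [];

  // Without a microphone there is no call at all, so this is treated as blocking.
  if (!result.microphoneAccessible) {
    blocking.push("Microphone is not accessible");
  }
  // Assumed here to degrade rather than block, since some services can fall back to TCP.
  if (!result.udpReachable) {
    degrading.push("UDP appears to be blocked");
  }
  if (result.roundTripMs > limits.maxRoundTripMs) {
    degrading.push(`Round trip time is high (${result.roundTripMs} ms)`);
  }
  if (result.packetLossPercent > limits.maxPacketLossPercent) {
    degrading.push(`Packet loss is high (${result.packetLossPercent}%)`);
  }

  const verdict: Verdict =
    blocking.length > 0 ? "fail" : degrading.length > 0 ? "warn" : "pass";
  return { verdict, issues: [...blocking, ...degrading] };
}
```

The end user would only need to see the verdict, while the issue list gives the support person something specific to act on.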

Check qualityRTC

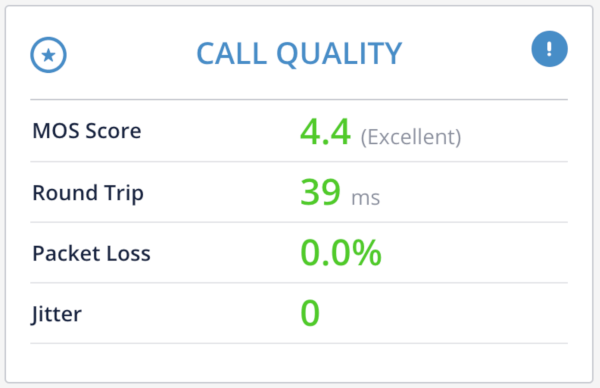

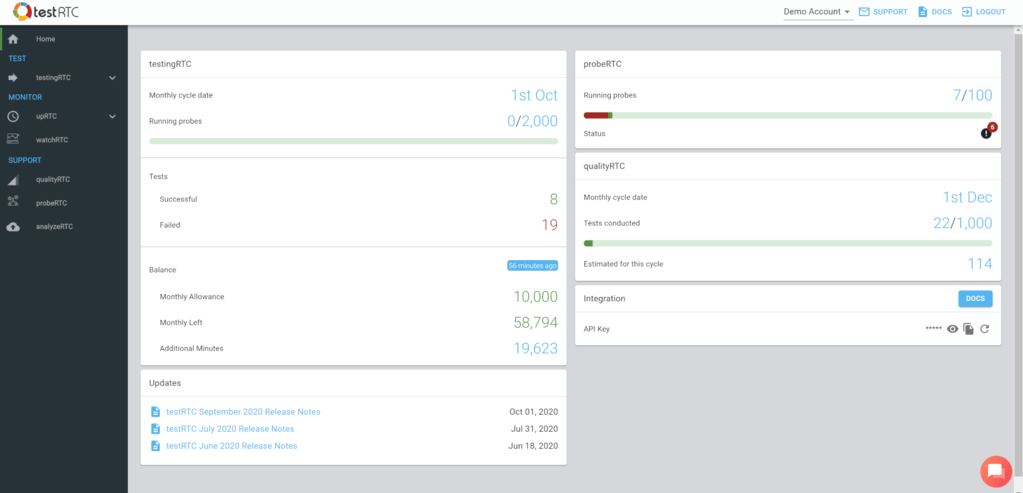

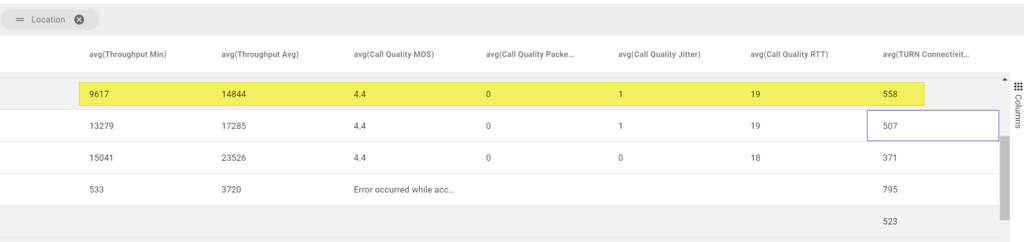

In the past two years or so we’ve been down this rabbit hole of VoIP network testing at testRTC. We designed and built a service to tackle this problem and, with a lot of help from our customers, have kept improving it to the point where it is today:

A simple-to-use, customizable solution that fits your infrastructure and workflow

Within minutes, the user will know if his network is good enough for your service, and your support will have all the data points it needs to assist your user in case of connectivity issues.

Check out our friction-free solution, and don’t forget to schedule a demo!
