Integrate a webhook at the end of a monitor and test run

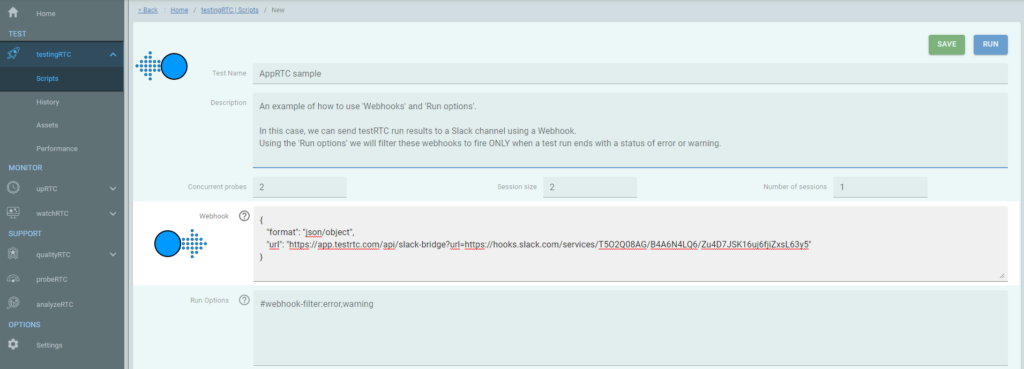

You can define a webhook that is called when a monitor or test run finishes executing. To define this ‘end of test/monitor webhook’, add the required webhook information to the ‘Webhook’ parameter on the test configuration page.

Read Webhooks in testRTC for more information on webhook use.

Tip: To invoke a webhook only under certain conditions, see Integrate a webhook filter.

Supported webhook structures

Two structures are supported for the ‘end of test/monitor webhook’ field:

- Standard (see example below)

- Advanced (custom body templating)

Webhook testing example

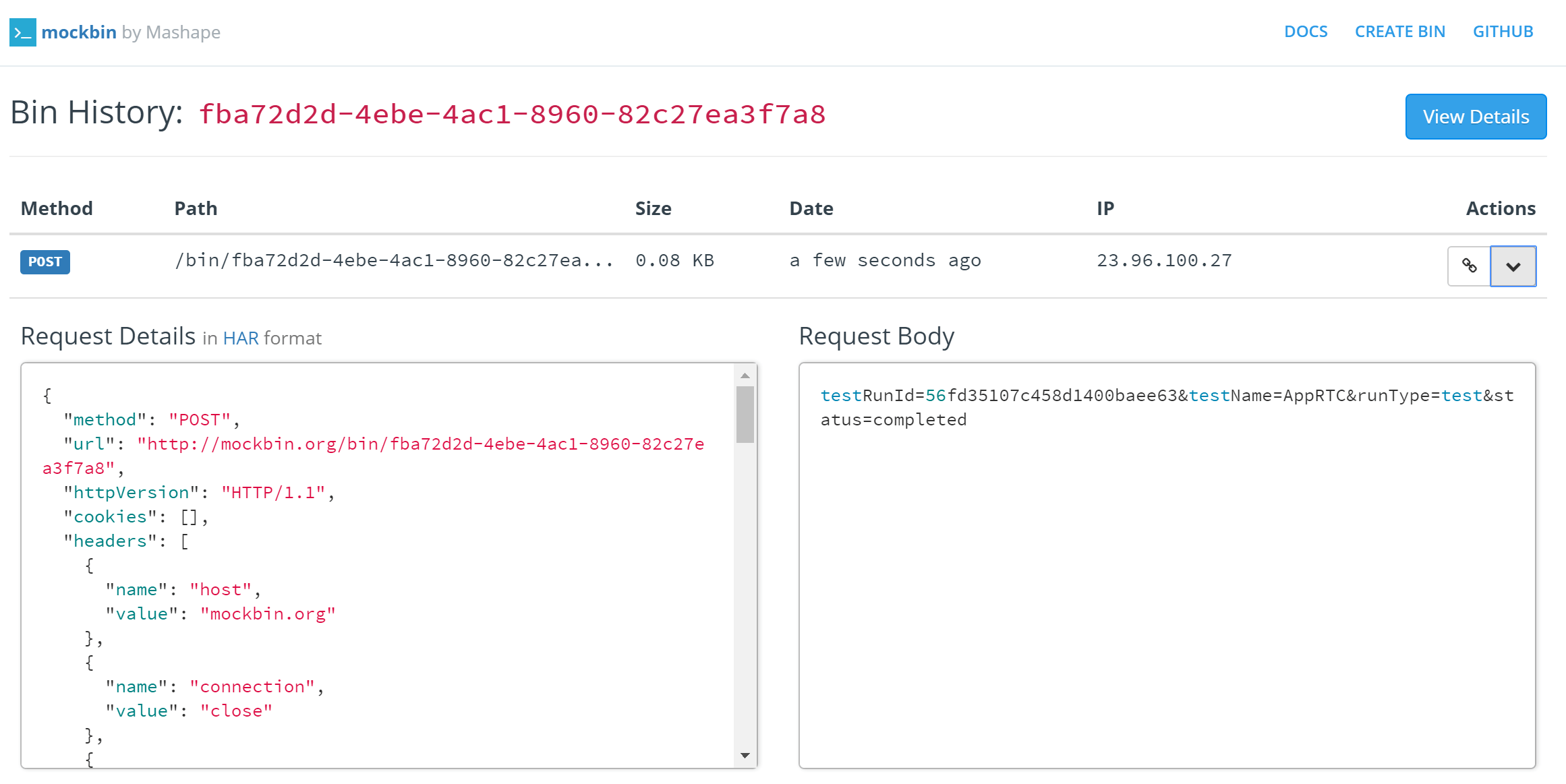

| Scenario | Example data |
| --- | --- |
| Successful test execution | testRunId=56fd35107c458d1400baee63&testName=AppRTC&runType=test&status=completed |
| Failed test execution | testRunId=56fd3d617c458d1400baee6f&testName=AppRTC&runType=test&status=failure |
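The example data above reads like URL-encoded key/value pairs. As a minimal sketch, assuming a receiver gets the data in exactly that form (the encoding testRTC uses on the wire is not stated here), the fields could be decoded in Python as follows. The function names `parse_webhook_payload` and `run_succeeded` are hypothetical.

```python
from urllib.parse import parse_qs


def parse_webhook_payload(raw: str) -> dict:
    """Decode a query-string style payload into a flat dict of field -> value."""
    parsed = parse_qs(raw, keep_blank_values=True)
    # parse_qs returns a list per key; each field appears once in the
    # examples, so keeping the first value is enough for this sketch.
    return {key: values[0] for key, values in parsed.items()}


def run_succeeded(payload: dict) -> bool:
    # "completed" is the status shown in the successful example;
    # the failed example carries "failure" instead.
    return payload.get("status") == "completed"


ok = parse_webhook_payload(
    "testRunId=56fd35107c458d1400baee63&testName=AppRTC&runType=test&status=completed"
)
failed = parse_webhook_payload(
    "testRunId=56fd3d617c458d1400baee6f&testName=AppRTC&runType=test&status=failure"
)
print(ok["testRunId"], run_succeeded(ok))
print(failed["testRunId"], run_succeeded(failed))
```

Keeping the status check in its own function makes it easy to adjust if other status values turn up in practice.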

To view an example webhook using the above data, do the following:

- Generate a custom endpoint in Mockbin – in http://mockbin.org/ click on ‘Create Bin’

- In the ‘Bin Builder’ page, leave all suggested default parameters and just click on ‘Create Bin’ at the bottom-right area of the page.

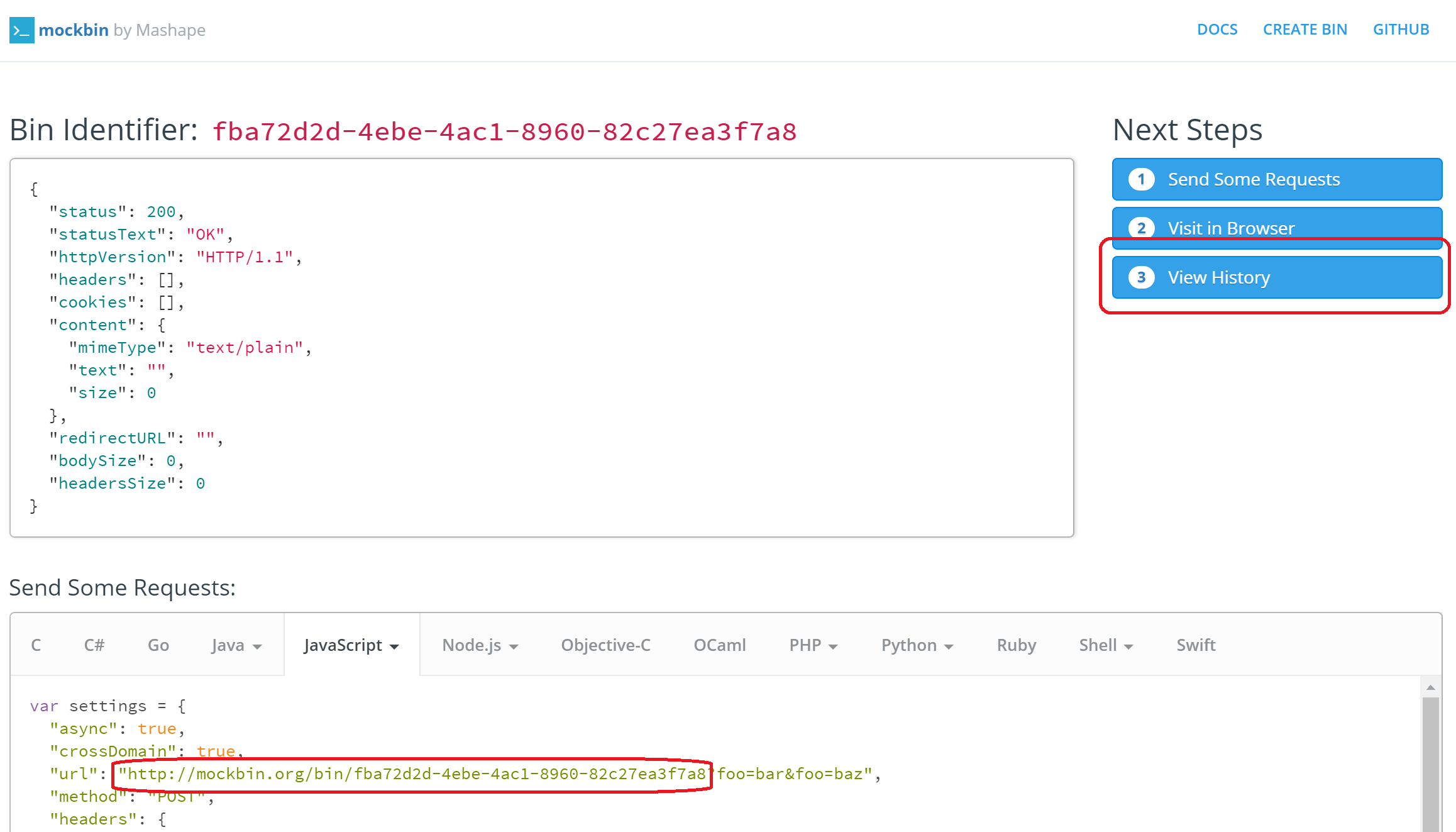

- On the next page, your temporary Bin Identifier is shown. Your URL should be http://mockbin.org/bin/[Bin Identifier]. You can build this URL yourself or copy it from the code samples at the bottom of the ‘Bin Identifier’ page.

- Note: If you copy the URL from a code sample, remove the URL parameters at the end of the URL (“?foo=bar&foo=baz”).

- Add the URL to your test – in the test configuration page under Webhook (for example):

http://mockbin.org/bin/5b76146c-37da-40ef-bf01-89043b0d6a75

- Click on ‘View History’ to go to the Mockbin Bin History page

- Run the test

- After the test ends, refresh the Mockbin Bin History page

- You should find a new entry in the history. Click on the new entry to see the request details and body.
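Before running a full test, it can be handy to confirm that the bin records incoming requests. The sketch below builds a request carrying the sample data from the table earlier in this article. The use of POST, the form-encoded body and the helper name `build_sample_request` are assumptions for illustration, not a description of what testRTC itself sends.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_sample_request(bin_url: str, fields: dict) -> Request:
    """Build (but do not send) a request that mimics the sample webhook data."""
    # Encode the fields as key=value pairs joined by "&", as in the examples.
    body = urlencode(fields).encode("ascii")
    return Request(
        bin_url,
        data=body,
        method="POST",  # assumption: the real HTTP method is not stated in this article
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


sample = build_sample_request(
    "http://mockbin.org/bin/5b76146c-37da-40ef-bf01-89043b0d6a75",
    {
        "testRunId": "56fd35107c458d1400baee63",
        "testName": "AppRTC",
        "runType": "test",
        "status": "completed",
    },
)
print(sample.get_method(), sample.full_url)
print(sample.data.decode("ascii"))
```

Passing the returned object to `urllib.request.urlopen` sends it; a new entry should then show up in the Bin History page, assuming the bin is still available.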

Advanced

The advanced webhook definition accepts a complete JSON object, for example:

{"url": "http://mockbin.org/bin/4cb03fa6-d1aa-4533-a857-11d90293a4e8", "headers": {"custom-webhook-header": "web-testrtc-webhook"}}

If a webhook exists for an executed test or monitor, the testRTC manager calls that webhook after the execution with the following information:

- Type (monitor | test)

- Test name

- Test run ID

- Execution status

For further information about the JSON data format and available parameters, please refer to https://github.com/request/request
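Since the advanced definition must be valid JSON, one way to avoid quoting mistakes is to generate and check the string programmatically. A minimal sketch follows; the helper names are hypothetical, and treating `url` as a required key is an assumption based on the example above rather than a documented rule.

```python
import json


def build_advanced_webhook(url: str, headers: dict) -> str:
    """Return a JSON string with the same shape as the advanced example."""
    if not url.startswith(("http://", "https://")):
        raise ValueError("webhook url must start with http:// or https://")
    return json.dumps({"url": url, "headers": headers})


def validate_webhook_json(text: str) -> dict:
    """Parse a webhook definition and check that it carries a url."""
    config = json.loads(text)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(config, dict) or "url" not in config:
        raise ValueError('expected a JSON object with a "url" key')
    return config


definition = build_advanced_webhook(
    "http://mockbin.org/bin/4cb03fa6-d1aa-4533-a857-11d90293a4e8",
    {"custom-webhook-header": "web-testrtc-webhook"},
)
print(definition)
print(validate_webhook_json(definition)["headers"])
```

The printed string can be pasted into the ‘Webhook’ parameter; running `validate_webhook_json` on a hand-edited definition catches syntax errors before a test run.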

Related information

- A nice utility to validate your JSON structure: http://www.jsoneditoronline.org

- For Slack integration, check this article.

- We support different webhook formats